Designers increase the number of processors and shrink the number of databases in their never-ending quest to make a more realistic virtual world for military simulation.

By Ben Ames

Improved displays and screens are helping engineers build sharper pictures, but the greatest improvement in military simulation and mission rehearsal has been in software. Military users strive to feed the simulators with real-world, nearly real-time data freshly collected from satellite-based sensors.

They also want to shrink the size and weight of the machines, so they can transport them closer to the battlefield.

Already, an Apache Longbow attack helicopter simulator fits inside a shipping container, so helicopter pilots in forward-deployed areas can rehearse their missions with actual topographical landmarks.

Elsewhere, some U.S. Army vehicles now have embedded training, with a simulator built into a Bradley Fighting Vehicle. In October, BAE Systems of Nashua, N.H., selected the Thermite Tactical Visual Computer (TVC) from Quantum3D in San Jose, Calif., for the BAE Systems Bradley A3 Embedded Tactical Training Initiative (BETTI).

That type of system enables soldiers to train with realistic-looking simulated terrain while they are sitting in their vehicles in the belly of a C-130 cargo plane, speeding to the site. When the airplane’s ramp drops to the runway, they drive directly from the virtual world into the real one.

Pilots fly virtual Apache helicopters through a scene created with CAE’s Medallion-S database.

For this practice to work, the simulation must have enough fidelity to earn troops’ confidence that they can duplicate their simulated lessons in the heat of battle.

“The name of the game is ‘How real is the immersion?’ ” says Frank Deslisle, vice president of engineering and technology at the L-3 Link Simulation & Training segment in Arlington, Texas. “Our key challenge is to replicate scenarios and represent the physical world in the display environment.”

Simulation designers at Link achieve that goal through four technology areas: the display mechanism such as a screen or prism; image generation and rendering; a fixed-environment database including terrain, roads, and buildings; and a dynamic behavior database, such as weather, humans, and explosions.

Building a better virtual world

Link engineers are always trying to pump more data into a system to build a realistic picture.

“Training scenarios used to be military-versus-military in set geographic battlefields. But today’s scenarios are all civilian and urban; now they want Baghdad, with civilians, IEDs, and ambulances. That is the number-one demand right now,” Deslisle says.

To create that scenario, simulator designers rely on classified government information to build a database of the digitized world. Depending on the company’s clearance, military customers will provide data from the National Geospatial-Intelligence Agency in Bethesda, Md. That data goes well beyond simple road maps: it includes multispectral, high-resolution, submeter imagery from satellites.

The next problem for simulator designers is how to handle so much data. Recent advances have sharply cut the time needed to turn it into a working database.

“As opposed to taking one or two years to build a geospecific area, now it’s down to a matter of days, or maybe up to two months, depending on how much data you want,” he says.

Still, the designers are not finished. They must add dynamic variables such as recent weather, UAVs circling above, nearby allied troops, U.S. ground troops and target spotters, and enemy snipers in buildings.

“Then you add crowd behaviors, such as how a crowd disperses when an IED goes off: a noncombatant civilian will run away, but a terrorist or militant might act differently,” Deslisle says. “We use semiautomated forces, so we can program certain fighting doctrines.”
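The kind of rule Deslisle describes can be sketched in a few lines of Python. This is only an illustration: the roles, the step size, and the "militant holds position" rule are assumptions made for the example, not L-3 Link's semiautomated-forces model.

```python
import math
from dataclasses import dataclass


@dataclass
class Agent:
    role: str   # "civilian" or "militant" (hypothetical role names)
    x: float
    y: float


def react_to_blast(agent, blast_x, blast_y, step=1.0):
    """Return the agent's position one time step after a blast."""
    dx, dy = agent.x - blast_x, agent.y - blast_y
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return agent.x, agent.y          # no escape direction defined
    ux, uy = dx / dist, dy / dist        # unit vector pointing away from the blast
    if agent.role == "civilian":
        # Noncombatants run directly away from the blast.
        return agent.x + step * ux, agent.y + step * uy
    # Assumed doctrine for any other role: hold position.
    return agent.x, agent.y


civilian_pos = react_to_blast(Agent("civilian", 3.0, 4.0), 0.0, 0.0)
militant_pos = react_to_blast(Agent("militant", 3.0, 4.0), 0.0, 0.0)
```

A real doctrine table would replace the single `if` with per-role behavior, but the structure is the same: one event, different responses by role.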

Video game technology

Modern video games, such as commercial flight simulators, also strive for high fidelity in realistic environments, yet military simulator designers cannot borrow much technology from them, he says.

First, a consumer video game involves a very small, contained virtual environment: a straight-ahead view on a computer or television screen. Second, a military simulation environment contains more than just visual mapping; it must also correspond to a pilot’s full sensor suite, including infrared, radar, and electro-optical cues. Finally, consumer video games deliberately go beyond reality to excite the user, letting him move faster and jump higher than humans can.

“You don’t want to do that with military simulators because it would get pilots killed. We must keep our behaviors and models physics-based,” he says.

About the only common technology is hardware, such as graphics accelerator cards from companies like Nvidia Corp. in Santa Clara, Calif., or ATI Technologies Inc. in Markham, Ontario.

“We use commercial graphics cards, but we use a lot more of them; we package four, six, or eight of them ganged together,” Deslisle says. “They release a new card every six months, so we’re riding the COTS curve, driven not by the DOD [U.S. Department of Defense] but by commercial investment.”

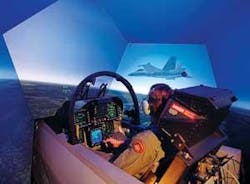

A Greek pilot flies his F-16 in a golf-ball-shaped SimuSphere trainer from L-3 Link.

Another challenge for military simulators is the helicopter, the most difficult fighting platform to simulate, he says.

A user in a simulated ground vehicle or on foot can see just two or three miles, so that limits the amount of data the simulation needs. Likewise, a user in an F/A-18 or B-52 jet aircraft simulator can see a long way, but his altitude precludes seeing many details.

A helicopter is the worst of all worlds: the user can see a blade of grass on the ground, then hover high enough to see miles of fast-changing detail. And helicopters are agile; they can move quickly and then hide behind trees, so their simulated worlds must be complex.

The mechanism of display

Military customers always ask for ever-smaller displays so they can transport the entire system close to each battlefield. That way, pilots can train for specific missions using sensor data recently collected from the environment they are about to enter.

The state of the art in permanent displays is an actual cockpit transferred inside a 30- or 40-foot diameter dome. The entire golf-ball-shaped structure mimics motion with hydraulic legs, while projectors play films of the virtual world on the inside of the dome wall.

Engineers at Link now make a smaller version of the dome simulator called the SimuSphere visual system display. The design is a dodecahedron geometric shape, built with pentagonal facets that are each the same shape, size and distance from the pilot’s eye point. Customers can build this modular system with three, five, or seven facets for partial immersion, or nine facets for a full 360-degree view.

In September, L-3 Link Simulation and Training delivered a SimuSphere-based F-16 training device to the Greek air force, enabling pilots to practice air-to-air and air-to-ground combat, takeoffs and landings, low-level flight, emergency procedures, and in-flight refueling.

Also in 2005, L-3 Link delivered a SimuSphere-based F/A-18 Hornet fighter-bomber training system to Oceana Naval Air Station in Virginia Beach, Va., and another to Lemoore Naval Air Station in Lemoore, Calif. Each four-ship system will allow F/A-18C pilots to train as a tactical team.

Army pilots will also train on L-3 Link machines, as part of the U.S. Army’s Flight School XXI, a program to train 1,200 student pilots per year for combat missions in the UH-60A/L Black Hawk, CH-47D Chinook, AH-64D Apache Longbow, and OH-58D Kiowa Warrior.

Under a $471.7 million subcontract with Computer Sciences Corp., Link will deliver 19 advanced aircraft virtual simulators (AAVS) and 18 reconfigurable training devices to the Army Aviation Center at Fort Rucker, Ala., over a 57-month period ending in 2008.

Such systems work well, but are not portable; pilots must travel to a fixed location for training. Another drawback is the parallax problem, when side-by-side pilots see slightly different pictures on the dome wall.

The most recent simulator from L-3 Link is the advanced helmet-mounted display (AHMD), due to enter production in the first quarter of 2006. Instead of sitting inside a dome, pilots wear miniature projectors attached to their own flight helmets. Compared to a dome, it gives the same field of view (what they actually look at) and field of regard (what they would see if they quickly turned their heads).

The AHMD offers 100-degree horizontal by 50-degree vertical field of view, with a 30-degree binocular overlap region that induces a sense of immersion. It also has 60-percent see-through capability so users can view their cockpit controls or read maps in high ambient lighting.
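Those figures imply how much each eye sees. A quick calculation, assuming the two eye channels are symmetric (an assumption; the article gives only the total and the overlap):

```python
def per_eye_fov(total_deg, overlap_deg):
    """Horizontal field of view per eye for two symmetric channels.

    Follows from: total = 2 * per_eye - overlap.
    """
    return (total_deg + overlap_deg) / 2


per_eye = per_eye_fov(100, 30)      # degrees covered by each eye
monocular_flank = per_eye - 30      # degrees on each side seen by one eye only
```

Under that assumption each eye covers 65 degrees, with a 35-degree flank on either side of the shared 30-degree region.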

Another challenge was to reduce latency or “smearing” by picking the best liquid-crystal displays (LCDs). “Pilots turn their heads fast, and if they see smearing, they’ll walk out of the simulator,” Deslisle says.

Finally, each system is tethered with a thin fiber-optic cord to a server, making the entire machine more portable than previous simulators, he says. “Now they can do training and mission rehearsal en route, or even in theater,” Deslisle says. “They are not restricted to a training center. That’s the goal, and now the technologies are converging on that.”

Merging databases

Soldiers in the U.S. Army’s 160th Special Operations Aviation Regiment (Airborne), known as SOAR, at Fort Campbell, Ky., use flight simulators to explore the world’s battlefields virtually before actually deploying.

They demand the latest displays and databases available to power the helicopter simulators. Whether they are flying an MH-47G Chinook, MH-60K/L Black Hawk, or A/MH-6 Little Bird, they demand accuracy to the last detail.

“The whole goal is to forget that it’s a simulation, to get immersed in virtual reality and five minutes later, forget that you’re in a building somewhere,” says Dave Graham, director of special operations forces programs at CAE in Tampa, Fla. CAE is prime contractor for the 160th’s flight simulators.

To boost realism, Army trainers use classified databases to feed the flight simulators with scenarios that match the actual terrain that soldiers will encounter on their next mission.

That is nearly impossible to achieve with conventional simulation technology because of the time delay in merging databases. For example, a high-fidelity simulation could use as many as 13 different applications, including one database for radar signals, another for out-the-window scenery, and a third for threat simulation.

“The problem is, you can’t correlate those in less than months and months of time. So you can’t use them for mission rehearsal,” Graham says.

It is much easier to build realistic flight simulators for commercial pilots because the scenarios do not have to represent real-time sensor data.

In September CAE won contracts for C$58 million to build four flight simulators: three Boeing 737 units, including one for the Japan Airlines training center at Tokyo’s Haneda Airport, and an Embraer 170 simulator for Finnair’s training center in Helsinki, Finland.

All four units will be Level D flight simulators-the highest performance rating for flight training equipment given by the U.S. Federal Aviation Administration. Each will use CAE’s Tropos visual system, which uses satellite imagery as well as sophisticated weather and lighting effects to provide realistic training scenarios for pilots.

The problem with designing military simulators is even more challenging when soldiers connect several simulators in different locations to train as a team of helicopters, building on DARPA’s original concept of SIMNET (simulator networking).

Even on Fort Campbell’s high-end systems, engineers struggled to merge Lockheed Martin’s Tactical Operation Scene (TOPSCENE) satellite-imagery database with CAE’s Medallion-S weather and weapons database. CAE solved the problem with a hybrid called Medallion S/TDS (TOPSCENE data server).

Now engineers at CAE have found a better way. Under contract to the Army’s Program Executive Office for Simulation, Training and Instrumentation, CAE will soon deliver a common environment/common database to the 160th regiment.

Any subsystem that complies with this common-database (CDB) standard can store and retrieve its data smoothly alongside all the others.

“Because there’s only one source, not two separate renditions, correlation is built-in. You should be able to look out the window and see the same thing that shows up on the radar,” Graham says.
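Graham's point about built-in correlation can be shown with a toy example in Python. The terrain records, field names, and reflectivity values below are invented for illustration and do not reflect the actual CDB format; the only idea carried over is that every subsystem derives its view from one shared source.

```python
# Hypothetical single-source terrain store: one record per grid cell.
TERRAIN = {
    (0, 0): {"elevation_m": 120.0, "surface": "grass"},
    (0, 1): {"elevation_m": 135.0, "surface": "road"},
    (1, 0): {"elevation_m": 410.0, "surface": "rock"},
}

# Assumed radar reflectivity per surface type, for illustration only.
REFLECTIVITY = {"grass": 0.2, "road": 0.5, "rock": 0.8}


def out_the_window(cell):
    """Visual subsystem: derive what the pilot sees from the shared record."""
    rec = TERRAIN[cell]
    return {"height_m": rec["elevation_m"], "texture": rec["surface"]}


def radar_return(cell):
    """Radar subsystem: derive the radar picture from the same record."""
    rec = TERRAIN[cell]
    return {"height_m": rec["elevation_m"],
            "reflectivity": REFLECTIVITY[rec["surface"]]}


def correlated(cell):
    """True when both subsystems agree on the terrain height."""
    return out_the_window(cell)["height_m"] == radar_return(cell)["height_m"]
```

Because neither function keeps its own copy, an update to `TERRAIN` shows up in both views at once; with two separately built databases, the same check would have to be enforced by a lengthy correlation pass.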

CAE designers will use this standard in the MH-47 simulator they will deliver in September 2006 at Fort Campbell, and in an MH-60 simulator eight months later.

In October, designers at Northrop Grumman used the same strategy when they adopted an open database and image generator from MultiGen-Paradigm Inc. of San Jose, Calif. They will use it at Northrop Grumman’s Cyber Warfare Integration Network in San Diego to design and evaluate unmanned aerial vehicles.

The new database will deliver an open-format terrain map to support out-the-window and simulated sensor imagery for UAV demonstrations. It will export several versions of the database from one common source to provide correlated C4I (command, control, communications, computers, and intelligence), semi-automated forces, and visualization.

The other key enabling technology is sheer computing power, Graham says. “We’ve had a paradigm shift in simulation databases, but it depends on processors and networks that have only been available in the past year or so.”

For instance, CAE recently purchased an immense, 50-terabyte storage-area network (SAN), along with powerful, four-processor servers to help move that data around. They will deliver this system to Fort Campbell with the first simulator in the fall of 2006.

Their suppliers include IBM, of Markham, Ontario, for the SAN and the servers, and SEOS of West Sussex, England, an electro-optical services company that provided modified Barco projectors for the physical display.

COTS computers power high-end simulators

“The technology of simulation has taken a huge step in the last seven or eight years,” says Tony Spica, vice president of sales at RGB Spectrum in Alameda, Calif.

“Display technology has moved along, offering more choices, and video processors are getting better. Also, advances in software let us develop better scenes, such as downtown Baghdad. It doesn’t do you any good to train in downtown L.A.”

At the same time, increased use of commercial off-the-shelf (COTS) parts has enabled designers to build those simulators for less money.

“The cost of components has been driven down drastically in recent years,” Spica says. “They used to buy a $1 million SGI supercomputer to generate the out-the-window view, and now they buy a $5,000 PC and do a better job.”

Despite the cheaper parts, designers still struggle to provide perfect synchronization when they tie them all together.

“You need the ability to be real-time because the vehicle is moving so fast,” he says. “Latency happens when the pilot sees a difference in imagery on two different sources-you must marry the helmet HUD view to the out-the-window view.”

Engineers at RGB do this with products like the SynchroMaster 555, a “keyer” that can overlay data swiftly from different sources, placing data symbology on top of images, or infrared imagery over topography. It combines images from two high-resolution computer sources or image generators into a composite image. The two signals are digitized, synchronized, and combined at resolutions as fine as 1920 by 1200 pixels.

In a typical aircraft or helicopter simulation, one image generator produces the background and an out-the-window display, while a second image generator produces the foreground and a head-up display (HUD). The SynchroMaster 555 combines the two images into a pilot’s view through the HUD.

In previous designs, those data streams came from one supercomputer. Modern designers save money by breaking up the functions onto a collection of PCs, which requires them to frame-synchronize the results.

RGB does this with a technique called “genlock,” which sets one sync pulse for all the different signals so each frame occurs at the same time. There can be many signals to coordinate: an F-16 simulator has two 6-by-6-inch displays, and an Apache helicopter has four displays (two in front and two in back).
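The idea behind a shared sync pulse can be modeled roughly in Python. This is a simplified timing model, not RGB Spectrum's implementation: the frame period, the source names, and the notion of a "ready time" per source are assumptions for the example.

```python
FRAME_PERIOD_US = 16_667  # assumed: roughly 60 frames per second


def next_sync_pulse(t_us):
    """Time (microseconds) of the first reference pulse at or after t_us."""
    pulses = -(-t_us // FRAME_PERIOD_US)   # ceiling division on integers
    return pulses * FRAME_PERIOD_US


def genlock(ready_times_us):
    """Release every source's frame on the same shared pulse.

    ready_times_us maps a source name to the time its frame is ready.
    """
    release = next_sync_pulse(max(ready_times_us.values()))
    return {name: release for name in ready_times_us}


# Two hypothetical image generators finish their frames at different times.
schedule = genlock({"ig_background": 1_000, "ig_hud": 9_000})
```

Without the shared pulse, the two frames would reach the display 8 milliseconds apart; with it, both appear on the same tick.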

Using a chroma-key technique, the SynchroMaster makes one signal visible through the other wherever a color falls within a specified range. Either channel can be configured as the foreground or the background signal, and the output signal is genlocked to one of the two inputs.
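A chroma key of this kind reduces to a per-pixel test. The Python sketch below works on small lists of RGB tuples; the function names and the green key range are illustrative assumptions, and the real product does this in hardware on live video.

```python
def in_key_range(pixel, key_lo, key_hi):
    """True when every color channel falls within the key range."""
    return all(lo <= c <= hi for c, lo, hi in zip(pixel, key_lo, key_hi))


def chroma_key(foreground, background, key_lo, key_hi):
    """Composite two equally sized images (lists of rows of (r, g, b)).

    Wherever the foreground pixel is inside the key range, the
    background pixel shows through; elsewhere the foreground wins.
    """
    return [
        [bg if in_key_range(fg, key_lo, key_hi) else fg
         for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]


# One-row example: a green "hole" next to a white HUD symbol.
hud = [[(0, 255, 0), (255, 255, 255)]]
scene = [[(10, 20, 30), (40, 50, 60)]]
composite = chroma_key(hud, scene, key_lo=(0, 200, 0), key_hi=(50, 255, 50))
```

Swapping the two image arguments corresponds to configuring the other channel as the foreground.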

A synchronized picture is crucial for instructors as well as pilots. “The instructor needs to see the exact same thing the student does, so he knows if the pilot fired a missile before his targeting reticles were overlaid on the picture, for example,” Spica says.

Simulation for one pilot is a difficult engineering challenge, but mission rehearsal makes it even harder. Military customers often want to fly four virtual F-16s and six virtual Apaches in the same simulation. In fact, the pilots are not even in the same location; they run group exercises with a pilot sitting at Luke AFB in Arizona, another at Langley AFB in Virginia, and a third at Eglin AFB in Florida.

Instructors record the simulated mission

Just when engineers tie the entire package together, there is one more challenge-recording the entire adventure so instructors can replay it in a classroom the next day for debriefing.

RGB designers have developed a digital recording system for the F-35 Joint Strike Fighter simulator. To cover all of a cockpit’s displays and window views, this DGY product can record data from six sources in one digital stream, while an instructor puts bookmarks and typed notes in the margins.

The system runs at 30 frames per second with resolution of 1280 by 1024 pixels. The result is recorded as a digital stream with JPEG 2000 compression, then saved on a hard drive or RAID.
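A back-of-the-envelope calculation in Python shows why compression matters here. It assumes each of the six sources runs at the full stated resolution and frame rate, and that pixels are 24-bit RGB; neither detail is given in the article.

```python
WIDTH, HEIGHT = 1280, 1024
FRAMES_PER_SECOND = 30
SOURCES = 6
BYTES_PER_PIXEL = 3   # assumption: 24-bit RGB


def uncompressed_bytes_per_second(sources=SOURCES):
    """Raw video data rate before any compression."""
    return WIDTH * HEIGHT * FRAMES_PER_SECOND * BYTES_PER_PIXEL * sources


raw_rate = uncompressed_bytes_per_second()
raw_rate_mb = raw_rate / 1e6   # megabytes per second
```

Under those assumptions the raw stream is about 708 megabytes per second, which is why it is compressed before being written to disk.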

Future improvements in military simulation will happen in the recording stage, Spica says. Joint Strike Fighter pilots will train on the first digital simulator, using digital DVI instead of analog RGB signals.

“That improves the quality of what you see because you don’t have to convert A-D and D-A. So you get better image quality with less latency,” Spica says.

Supercomputers simulate missile flight

With clever engineering, portable flight simulators can run on commercial PCs, yet some jobs are so big they need a classic supercomputer.

In Huntsville, Ala., soldiers at the Army’s Space and Missile Defense Command (SMDC) use enormous supercomputers to model missile flight.

“Once it’s in flight, pieces come off the missile at different stages, such as the shroud cover that protects the seeker at launch. We have to make sure they get away from the missile,” says Charlie Wilcox, from Madison Research Corp. (also of Huntsville), the prime contractor that runs the simulation center for the Army.

“Since 9/11, our center is doing more threat analysis, using radar cross sections to discriminate between the good guy and the bad guy. We find how optical and radar technologies can detect the bogey you’re going after.”

In June, the center upgraded its X1E supercomputer and added an XD1 supercomputer, both from Cray Inc. in Seattle. Under terms of a contract with Madison Research from SMDC and the Army Forces Strategic Command, funding will come from the DOD’s High Performance Computing Modernization Program and the Missile Defense Agency (MDA).

The X1E upgrade will use 32 multistreaming vector processors, while the 1.27-teraflop Cray XD1 system will use 144 dual-core AMD Opteron processors networked with Cray’s RapidArray interconnect.
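The 1.27-teraflop figure is consistent with a simple peak-rate estimate. The Python sketch below assumes a 2.2-GHz clock and two floating-point operations per core per cycle; both values are assumptions, since the article states only the processor count and the total.

```python
PROCESSORS = 144
CORES_PER_PROCESSOR = 2        # dual-core, per the article
CLOCK_HZ = 2.2e9               # assumption: 2.2-GHz parts
FLOPS_PER_CYCLE = 2            # assumption: two operations per core per cycle

peak_flops = PROCESSORS * CORES_PER_PROCESSOR * CLOCK_HZ * FLOPS_PER_CYCLE
peak_teraflops = peak_flops / 1e12
```

With those inputs the estimate comes to roughly 1.27 teraflops. This is a theoretical peak; sustained performance on real CFD codes would be lower.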

SMDC researchers use these supercomputers to run computational-fluid-dynamics (CFD) codes to generate aerodynamic models of missile configurations, and to simulate phenomena such as jet and shock interactions, combustion, and propulsion flow.

“SMDC selected the Cray systems for their ability to run larger, more time-critical simulations and to produce higher-resolution engineering results,” says Larry Burger, director of SMDC’s Future Warfare Center.

“Capabilities are rapidly expanding; we’re seeing phenomenal growth. What we used to call a workstation is now a PDA; you can fill your pocket with 20 gigabytes at low expense. So we have to be aggressive to stay on top of the capabilities.”

Those researchers need all the computing power they can find. “Missiles fly faster than any wind tunnel can achieve, so we often do ‘sheet metal bending’-as you increase the speed, you start to get anomalies in the wind tunnel,” Wilcox says. “So we have to extrapolate, and move to the simulation environment. Then we slowly evolve the hardware and simulation to match the flight characteristics, to use it in scenario-building for engagement.”

In October, SMDC leaders added even more computing power. They contracted with Madison Research to install a 128-processor Altix 3700 Bx2 supercomputer from Silicon Graphics Inc. (SGI) in Mountain View, Calif.

The computer has a shared-memory design, which allows fast access to all data in the system, without having to move that data through I/O or networking bottlenecks, Burger says.

The Altix 3700 Bx2 uses a Linux operating system and SGI’s NUMAflex global shared-memory architecture. The latest version uses NUMAlink 4 interconnect technology to boost bandwidth between CPU bricks up to 6.4 gigabytes per second with less than 1-microsecond MPI (message passing interface) latency.
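Latency and bandwidth matter for different message sizes, as a first-order model in Python shows. It treats transfer time as latency plus size divided by bandwidth, uses the article's two figures as upper and peak bounds, and ignores contention and protocol overhead, so it is a rough guide only.

```python
LATENCY_S = 1e-6            # article: less than 1 microsecond (upper bound used)
BANDWIDTH_BPS = 6.4e9       # article: up to 6.4 gigabytes per second


def transfer_time_s(message_bytes):
    """First-order estimate: fixed latency plus streaming time."""
    return LATENCY_S + message_bytes / BANDWIDTH_BPS


small = transfer_time_s(64)            # a 64-byte message
large = transfer_time_s(1_000_000)     # a 1-megabyte message
```

For the 64-byte message nearly all of the time is latency, while for the 1-megabyte message nearly all of it is bandwidth. Codes that exchange many small messages therefore benefit most from the low-latency interconnect.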

That shared-memory architecture is expensive. It costs three or four times what a common Myrinet network would cost, but because it is so much faster it’s still cost effective, they say.

Another supercomputer center for missile simulation runs at the Schriever Air Force Base in Colorado Springs, Colo., home of the Joint National Integration Center (JNIC), the DOD’s premier missile-defense wargaming center.

In September, Northrop Grumman Corp. won a 10-year, $1 billion contract from the MDA to continue its prime contractor role there. Researchers will use the system as a cost-effective means to perform end-to-end testing and integration of the Ballistic Missile Defense System (BMDS).

Supercomputers have special demands

Missile modeling demands more than pure speed; the systems also must be reliable. “Our focus is long-term stability,” says Wilcox. “Some of the codes run for six weeks, so we don’t want to interrupt that. You can’t allow breakage when you’re running a program for two months.”

The other main challenge for running a supercomputer is heat. “You can upgrade your system and generate four times the heat in the same floor space. We have to get forecasts from vendors for three to four years out, so we can plan for it,” Wilcox says. “A lot of centers are not ready to take on a large pile of processors. They have enough floor space for the computers but they can’t take them because they can’t get the heat out of the building.”