Executive Order 14028 on improving the nation’s cyber security directs each federal agency, including each military department, to “develop a plan to implement zero-trust architecture, which shall incorporate, as appropriate, the migration steps that the National Institute of Standards and Technology (NIST) within the Department of Commerce has outlined in standards and guidance.”

On the surface, that seems to be directed at federal enterprise networks, but the same principles can be applied to secure embedded computing systems.

The motivation for implementing a zero-trust architecture stems from the increase in network breaches for public and private enterprises. Traditional cyber security architectures attempt to establish and defend a network perimeter around a trusted computing environment using multiple layers of loosely connected security technologies.

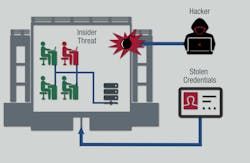

Contemporary threat actors, ranging from cyber criminals to state-funded hackers, have demonstrated the ability to breach network perimeter defenses. Once inside, they are free to move laterally across the network to other systems and gain largely unfettered access to those systems and to the data and algorithms they contain.

Beyond being susceptible to external threat actors, perimeter defenses do not protect well against stolen credentials or internal threats -- be they malicious or just careless. Malicious insiders can carry out fraud, theft of data and intellectual property (IP), and sabotage, which can include modifying or disabling functions, installing malware, and creating back doors.

Perimeter defenses still can play a role in protecting localized assets by acting as the first line of defense, but another layer is necessary to catch the perimeter breaches. A zero-trust approach shifts the emphasis from the perimeter of a network to the discrete applications and services within a network, building more specific access controls to those specific resources. Those access controls match the identity of users and devices to authorizations associated with those users and devices to ensure access is granted appropriately and securely.
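As a rough illustration of matching identities to authorizations, the sketch below grants access only when an exact combination of user, device, resource, and action appears in an authorization table. Every name in it (`Grant`, `GRANTS`, `is_allowed`, and the sample users and devices) is hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """One authorization: who, on which device, may do what to which resource."""
    user: str
    device: str
    resource: str
    action: str


# Hypothetical authorization table; a real system would provision this securely.
GRANTS = frozenset({
    Grant("maint_tech", "service_laptop_07", "diagnostics_log", "read"),
    Grant("mission_app", "flight_computer_1", "nav_data", "read"),
})


def is_allowed(user: str, device: str, resource: str, action: str) -> bool:
    """Deny by default: allow only when an exact matching grant exists."""
    return Grant(user, device, resource, action) in GRANTS
```

A valid user on an unrecognized device is refused, as is a recognized user and device requesting an action that was never granted.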

Zero-trust architecture can apply to embedded computing systems even though those systems often have a different software environment from enterprise systems. The most secure embedded systems use a separation kernel to isolate applications into partitions. Those partitions cannot access data or code in other partitions, and only pre-approved information flow can occur between partitions. Embedded systems also have a different security environment, in terms of configuration and numbers of users and privileges.

Security environment in embedded systems

Most modern embedded systems connect to a network, such as a cloud service, for data analysis or software updates, and that connection leaves them vulnerable to attack. In addition to network-based attacks, embedded systems also face attacks during maintenance and attacks by insiders.

Yet many embedded systems have only loose security postures because of the perceived cost to implement strict security. When security is implemented, it is almost always perimeter-based security at the edge of the embedded system. That can be as simple as a user ID/password combination that can grant broad admin-level privileges or something slightly more sophisticated like a firewall. Embedded systems can benefit from a zero-trust architecture, but they have two significant differences from enterprise environments that impact the security solution.

The first big difference is that embedded systems have a stable set of applications, embedded processors, and communication paths. Adding new applications or devices is rare, and even upgrading applications or replacing failed devices happens infrequently.

The system integrator can lock down the system configuration, including not just the operating system but also the middleware and applications. The execution schedule for trusted applications and the approved communications paths can be defined statically in a configuration file used at boot time.
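A static configuration of this kind might be checked at boot roughly as follows. This is a minimal sketch, assuming a fixed repeating time frame divided into per-partition windows; the names `Window`, `SCHEDULE`, `CHANNELS`, `FRAME_MS`, and `validate` are hypothetical and do not reflect any particular RTOS configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    """A time slot in the repeating frame reserved for one partition."""
    partition: str
    start_ms: int
    length_ms: int


# Hypothetical boot-time configuration, fixed by the system integrator.
FRAME_MS = 100
SCHEDULE = (
    Window("nav", 0, 40),
    Window("comms", 40, 30),
    Window("health_monitor", 70, 30),
)
CHANNELS = frozenset({("nav", "comms"), ("health_monitor", "comms")})


def validate(schedule, channels, frame_ms):
    """Return a list of configuration problems; an empty list means acceptable."""
    problems = []
    known = {w.partition for w in schedule}
    end_prev = 0
    for w in sorted(schedule, key=lambda w: w.start_ms):
        if w.length_ms <= 0:
            problems.append(f"{w.partition} has a non-positive window length")
        if w.start_ms < end_prev:
            problems.append(f"{w.partition} overlaps the previous window")
        end_prev = max(end_prev, w.start_ms + w.length_ms)
    if end_prev > frame_ms:
        problems.append("schedule exceeds the frame")
    for src, dst in sorted(channels):
        if src not in known or dst not in known:
            problems.append(f"channel {src}->{dst} names an unknown partition")
    return problems
```

Rejecting the configuration before any application starts means a malformed or tampered schedule never becomes the running policy.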

Integrity testing is still advised to detect if any software component gets altered. Note that embedded systems can benefit from the availability of multiple static configurations. During runtime, systems can choose between these pre-approved configurations to adapt to a component failure or a change in operational mode. Anytime a runtime configuration change is invoked, the security functions have the additional requirement to continuously maintain a secure state before, during, and after the configuration change.
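Choosing among pre-approved configurations at runtime can be sketched as below. The configuration names, the partition names, and the `ConfigManager` class are all hypothetical. The point of the sketch is that the active policy is always exactly one complete approved set and that a request for any other configuration leaves the current one in force.

```python
from types import MappingProxyType

# Hypothetical pre-approved configurations: each maps a mode name to the
# complete set of information flows permitted in that mode (read-only view).
APPROVED_CONFIGS = MappingProxyType({
    "normal": frozenset({("sensor_a", "fusion"), ("fusion", "display")}),
    "sensor_a_failed": frozenset({("sensor_b", "fusion"), ("fusion", "display")}),
    "maintenance": frozenset({("fusion", "maintenance_port")}),
})


class ConfigManager:
    """Tracks which pre-approved configuration is active."""

    def __init__(self, initial: str):
        if initial not in APPROVED_CONFIGS:
            raise ValueError(f"{initial!r} is not a pre-approved configuration")
        self._active = initial

    @property
    def active(self) -> str:
        return self._active

    def switch(self, name: str) -> bool:
        """Switch only to a pre-approved configuration; otherwise change nothing."""
        if name not in APPROVED_CONFIGS:
            return False
        # A single assignment: there is no intermediate, partially applied policy.
        self._active = name
        return True

    def flow_allowed(self, src: str, dst: str) -> bool:
        return (src, dst) in APPROVED_CONFIGS[self._active]
```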

The second significant difference between embedded and enterprise systems is that many embedded systems operate autonomously or with a minimal number of users and roles. This has two broad implications for secure operation. First, minimal administrative functionality and activity are needed to support the system. Second, because so little administrative activity takes place, additional assurance measures are needed to ensure that the security management functions execute autonomously and robustly.

These differences in environment and use cases enable embedded systems to tailor the security solution more easily than in an enterprise environment.

Zero-trust principles

Zero trust is designed to reduce the chance of unauthorized access and prevent malicious lateral movement within a network while protecting users and assets even when they are outside the perimeter defenses. A zero-trust architecture moves away from the default policy of “trust but verify” to a policy of “never trust, always verify.”

Every user, device, application, and data flow is verified, whether inside or outside a traditional network boundary. The other core concept of zero trust is the principle of least privilege, applied to every access decision. Common to many security approaches, the principle of least privilege states that a subject should be given the minimum access level required to perform a given task.

The National Security Agency (NSA) defines three guiding principles for zero-trust, and the DoD zero-trust Reference Architecture adds another for monitoring and analytics:

Never trust -- always verify

Treat every user, device, application, and data flow as untrusted and potentially compromised, whether inside or outside a traditional network boundary. Authenticate and authorize each one only to the least privilege required to get the task done.

Assume breach

Consciously operate and defend resources with the assumption that an adversary already has breached the perimeter and is present in the network. Deny by default, and scrutinize every request and requester.

Verify each action explicitly

Use multiple attributes (dynamic and static) to derive confidence levels for contextual access decisions to resources.

Apply unified analytics

Apply unified analytics for Data, Applications, Assets, and Services (DAAS) to include behavioristics, and log each transaction.
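To make the "verify each action explicitly" principle above more concrete, the following sketch combines static and dynamic attributes into a confidence score and compares it with a per-action threshold. The attributes, weights, thresholds, and names (`RequestContext`, `confidence`, `decide`) are invented for illustration and are not drawn from the NSA or DoD guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestContext:
    credential_valid: bool  # static: the requester presented a valid credential
    device_attested: bool   # static: the device passed a boot-time integrity check
    expected_mode: bool     # dynamic: the request fits the current operating mode
    recent_denials: int     # dynamic: denied requests from this requester lately


def confidence(ctx: RequestContext) -> int:
    """Combine attributes into a 0-100 score (illustrative weights only)."""
    if not ctx.credential_valid:
        return 0
    score = 40
    if ctx.device_attested:
        score += 30
    if ctx.expected_mode:
        score += 30
    score -= 10 * ctx.recent_denials
    return max(0, min(100, score))


# Hypothetical thresholds: riskier actions demand more confidence.
THRESHOLDS = {"read_telemetry": 50, "update_firmware": 90}


def decide(ctx: RequestContext, action: str) -> bool:
    required = THRESHOLDS.get(action)
    if required is None:
        return False  # deny by default: unlisted actions are never allowed
    return confidence(ctx) >= required
```

The same requester can be trusted enough to read telemetry but not to update firmware, and a run of recent denials lowers confidence for every later request.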

Separation kernels for embedded security

A well-accepted foundation for embedded security is the use of partitioning to run applications in separate isolated partitions. Apart from microcontrollers and digital signal processors, most CPU chips used in embedded systems include a memory management unit (MMU) that can be used for hardware-enforced partitioning of memory for different applications.

Although that MMU can be controlled by a general-purpose operating system (OS) or an industrial real-time OS (RTOS), the most secure systems use a separation kernel. A separation kernel is a specific type of microkernel where the essential functions running in privileged kernel mode are only the most critical security functions.

Some embedded systems implement an intermediate security solution using a hypervisor, and some hypervisors are built using separation kernel principles. The main difference is that a hypervisor includes virtualization in the trusted computing base (TCB) along with the isolation functionality, whereas a separation kernel minimizes the TCB by implementing virtualization in user space.

A separation kernel is a minimized OS kernel whose only function is to enforce four basic security policies: data isolation, fault isolation, control of information flow, and resource sanitation. All other OS services are moved to user space so that the code size and attack surface of kernel-mode code is the absolute minimum.
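Of the four policies, resource sanitation is perhaps the least self-explanatory. The sketch below assumes it means clearing a shared resource before another partition can use it, and illustrates that with a hypothetical `BufferPool` whose buffers are zeroed on release. A real separation kernel would do this for memory, registers, and other shared hardware state rather than Python byte arrays.

```python
class BufferPool:
    """Hypothetical pool of fixed-size buffers reused by different partitions."""

    def __init__(self, count: int, size: int):
        self._free = [bytearray(size) for _ in range(count)]
        self._owner = {}  # id(buffer) -> name of the partition holding it

    def acquire(self, partition: str) -> bytearray:
        buf = self._free.pop()
        self._owner[id(buf)] = partition
        return buf

    def release(self, buf: bytearray) -> None:
        del self._owner[id(buf)]
        # Sanitize in place so no residual data reaches the next owner.
        buf[:] = bytes(len(buf))
        self._free.append(buf)
```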

One way to think of this is applying the principle of least privilege to the OS itself. Software components with the most extensive attack surfaces and the largest number of vulnerabilities, such as the networking stack, file system, and virtualization, can execute effectively in user mode, so the security policy should not allow them to execute in privileged kernel mode.

Hypervisors add virtualization to the kernel code, generally for increased virtualization performance at the expense of a larger attack surface. That larger code base is much harder to secure, let alone prove to be secure.

With only the separation kernel running in privileged kernel space, all other software runs in partitions in user space. The separation kernel divides memory into partitions using a hardware-based MMU and allows only carefully controlled communications between non-kernel partitions.

Applications run in partitions, and each partition includes any OS services and middleware needed to support the application (figure 2). Shared OS services, such as networking stacks and file systems, may have a client-server architecture where the server runs in its own partition and the client side runs in one or more application partitions. The INTEGRITY-178 tuMP RTOS from Green Hills Software is an example of a secure RTOS that has OS services and a virtualization layer running on top of a secure separation kernel.

The fundamental security policies enforced by a separation kernel -- data isolation, fault isolation, control of information flow, and resource sanitation -- map well to zero-trust principles. Layered security extensions in the OS services provide additional capabilities.

Assume Breach requires defending resources with the assumption that an adversary already has breached the perimeter and is present in the network. That includes denying by default and scrutinizing every request and requester. A separation kernel isolates every application in a partition, denying data access by default into and out of the partition. A separation kernel goes even further by preventing any action or fault in a partition from affecting any other part of the system.

Never Trust -- Always Verify requires authorization of each user, device, application, and data flow only to the least privilege required to get the task done. A separation kernel has the security property of being “always invoked” and enforces the principle of least privilege on every access. An authenticated load process can be used for an application and even the separation kernel itself.
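An authenticated load can be sketched as a check that runs before an image is accepted. The example below uses an HMAC-SHA256 tag from the Python standard library as a stand-in. Fielded systems more commonly verify an asymmetric digital signature anchored in a hardware root of trust, and the function names here are hypothetical.

```python
import hashlib
import hmac


def sign_image(image: bytes, key: bytes) -> bytes:
    """Produce the authentication tag (done at build time by the integrator)."""
    return hmac.new(key, image, hashlib.sha256).digest()


def load_if_authentic(image: bytes, tag: bytes, key: bytes):
    """Return the image only if its tag verifies; otherwise return None."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    # compare_digest runs in constant time to avoid leaking how much matched.
    if not hmac.compare_digest(expected, tag):
        return None
    return image
```

An image altered by even one byte after signing fails the check and is never handed to the loader.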

Verify Each Action Explicitly uses multiple attributes to derive confidence levels for contextual access decisions to resources. A separation kernel does not allow dynamic access requests but instead enforces security policy through a static configuration file. In that way, it only allows pre-approved information flow regardless of privilege level.
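The effect of a static information-flow policy can be sketched with a toy message router. The `Kernel` class, the partition names, and the policy below are hypothetical. The points to notice are that flows are one-way, that there is no call for adding a flow after construction, and that a sender's claimed role plays no part in the decision.

```python
from collections import deque

# Hypothetical static policy: the only permitted (source, destination) flows.
FLOW_POLICY = frozenset({("sensor", "fusion"), ("fusion", "display")})


class Kernel:
    """Toy router that delivers messages only along pre-approved flows."""

    def __init__(self, policy):
        self._policy = frozenset(policy)  # fixed at construction, never extended
        partitions = {p for pair in self._policy for p in pair}
        self._inbox = {p: deque() for p in partitions}

    def send(self, src: str, dst: str, payload: bytes) -> bool:
        if (src, dst) not in self._policy:
            return False  # not pre-approved: dropped, whoever is asking
        self._inbox[dst].append((src, bytes(payload)))
        return True

    def receive(self, partition: str):
        box = self._inbox.get(partition)
        return box.popleft() if box else None
```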

Apply Unified Analytics requires logging all access requests and using analytics to look for suspicious behavior. Separation kernels can include audit logging, which collects the data needed for analytics. The analytics engine itself is beyond the scope of a separation kernel or even the OS services.
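The division of labor between audit logging and analytics might look like the sketch below: a log that only records each access decision, and a separate function, standing in for an external analytics engine, that flags sources with repeated denials. The record fields, names, and threshold rule are assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditRecord:
    tick: int       # monotonic timestamp supplied by the caller
    src: str
    dst: str
    allowed: bool


class AuditLog:
    """Append-only record of access decisions; it performs no analysis itself."""

    def __init__(self):
        self._records = []

    def record(self, tick: int, src: str, dst: str, allowed: bool) -> None:
        self._records.append(AuditRecord(tick, src, dst, allowed))

    def records(self):
        return tuple(self._records)


def noisy_sources(records, max_denials: int):
    """Analytics stand-in: sources with more than max_denials denied requests."""
    denials = Counter(r.src for r in records if not r.allowed)
    return sorted(s for s, n in denials.items() if n > max_denials)
```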

Given these mappings of zero-trust principles to separation kernel security policies, the INTEGRITY-178 tuMP RTOS can form the foundation of a zero-trust architecture for an embedded system. For programs that require more than zero-trust, the INTEGRITY-178 tuMP RTOS has been used as the foundation for a tactical cross domain system that meets NSA’s “Raise the Bar” set of cross domain requirements.

Richard Jaenicke is director of marketing for Green Hills Software in Santa Barbara, Calif.