State-of-the-art processors, fast networking and data sharing, open-systems standards, innovations in cooling and thermal management, and new systems architectures are converging on high-performance embedded computing systems to create new enabling technologies in real-time processing, artificial intelligence (AI), and machine learning for applications in image recognition, radar, electronic warfare (EW), signals intelligence (SIGINT), and more.

While these innovations promise new aerospace and defense capabilities that are almost beyond the imagination, these technologies also confront systems designers with challenges in packaging for small size, weight, and power consumption (SWaP), electronics cooling, and data security, and also threaten to outstrip expected new advantages in open-systems standard ecosystems sooner, rather than later.

Advanced processors

Much of the innovation in high-performance embedded computing starts with microprocessors and related devices, which range from central processing units (CPUs) and field-programmable gate arrays (FPGAs) to general-purpose graphics processing units (GPGPUs), analog-to-digital (A/D) converters, and digital-to-analog (D/A) converters.

Intel has scalable processors, which are targeted at the data centers," explains Denis Smetana, senior product manager at the Curtiss-Wright Corp. Defense

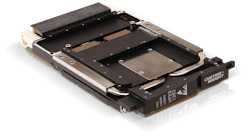

Solutions segment in Ashburn, Va. "These are really high power, and difficult to cool. Yet Intel has second-tier processors called the Xeon D family that have server-like features, but that are scaled down for the embedded market." The Curtiss-Wright CHAMP XD-4 DSP processor card utilizes these devices.

"The Xeon D brings server-class performance sized to the embedded market. Our CHAMP-XD4 uses four 100-gigabit Ethernet links to connect these devices into the backplane fabric," Smetana continues. "One aspect of the high core count Ice Lake D is that you can scale from 12 to 20 cores enabling a wide range of processing capability. The CHAMP-XD4 doubles the amount of memory bandwidth and I/O bandwidth of previous generations of processors as well as other dual Ice Lake products on the market today."

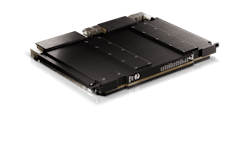

Curtiss-Wright also offers an FPGA board called the CHAMP FX-7, which has two AMD Versal FPGA-based systems on chip (SoC) for adaptive systems that combine floating-point processing and FPGA logic. "The other alternative is GPGPUs, with large arrays of processors which can be used for image or sensor co-processing," Smetana says. "These can be coupled with processor or FPGA cards like the CHAMP-XD4 and CHAMP FX-7, utilizing PCI Express running at Gen-4 speeds to provide a 30-gigabyte data path in both directions."
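As a rough check on the PCI Express figure quoted above, the theoretical throughput of a link can be worked out from the per-lane signaling rate and the line encoding. The sketch below is illustrative only: the 16-lane width is an assumption (the article does not state the link width), and the function name `pcie_gbytes_per_s` is hypothetical. The per-lane rates and the 128b/130b encoding are the published values for PCI Express Gen 3 through Gen 5.

```python
# Per-lane raw signaling rate in gigatransfers per second for each PCIe generation.
PCIE_GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}

# Gen 3 through Gen 5 use 128b/130b line coding: 128 payload bits per 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbytes_per_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction throughput in gigabytes per second.

    Ignores packet and protocol overhead, so real-world figures are lower.
    """
    return PCIE_GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes / 8


# Assumed link width of 16 lanes (not stated in the article).
for gen in (3, 4, 5):
    print(f"Gen {gen} x16: {pcie_gbytes_per_s(gen, 16):.1f} GB/s per direction")
```

Under that 16-lane assumption, a Gen 4 link works out to about 31.5 gigabytes per second in each direction before protocol overhead, which is in line with a data path of roughly 30 gigabytes per second.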

Industry demand is increasing for high-performance processors like FPGAs and GPGPUs. "There is a high uptick in GPGPUs being used," says Mark Littlefield, director of system products at Elma Electronic in Fremont, Calif. "These processors and switches can support time-sensitive embedded computing, even though some people have been tentative about high-speed Ethernet."

Ken Grob, director of embedded technologies at Elma Electronic, says he agrees. "GPGPUs are being used more often, and we are seeing that applied to non-traditional use cases where the GPGPU is used more in video applications. We also are seeing GPGPUs used in signal processing, using an algorithm that once was implemented in an FPGA, but now we are seeing it in a GPGPU. You can do that quicker and with less overhead with GPGPUs."

"Where in the past there were no alternatives to FPGAs for high-performance embedded computing, some systems designers today are turning to GPGPUs," Grob says. "The dependencies are more difficult when moving from one flavor of FPGA to another. With a GPGPU, however, the algorithm is implemented in software, and code running on a GPGPU library is much quicker to port from a software point of view than VHDL is from one FPGA environment to another."

Another FPGA challenge that GPGPUs may help solve is the shortage of FPGA programmers. "FPGA development talent is expensive and difficult to find," Elma's Littlefield points out. "There are just not that many people out there who have studied FPGAs enough to be effective developers. It is a different talent, and a different beast entirely."

"GPGPUs are getting so capable in performance that it opens the door to other alternatives," Grob says.

Networking and data sharing

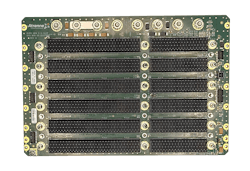

Two of the most influential network fabrics for high-performance embedded computing today are Ethernet and PCI Express, also known as PCIe. Today embedded computing systems

"Right now we are looking at going from PCI Express Gen 3 performance to Gen 4 and Gen 5, and also to Ethernet," says Bill Hawley, senior hardware engineer at Atrenne Computing Solutions, a Celestica company in Brockton, Mass. "We see demand from customers for PCI Express Gen 4 and Gen 5, and I see that continuing. The need for bandwidth and speed is something that will continue." Systems designers typically use PCI Express links to connect components on circuit boards, or when data movement must be as deterministic as possible.

When moving data from board to board, or from enclosure to enclosure, Gigabit Ethernet is the default choice, where enabling technologies are moving from 10-Gigabit Ethernet, to 40-Gigabit Ethernet, to 100-Gigabit Ethernet, and beyond.
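To put those line rates in perspective, the short calculation below estimates how many full-size frames per second a receiver would have to handle at each speed, and how little time that leaves per frame. It is a back-of-the-envelope sketch, not a model of any product mentioned here. It assumes standard untagged Ethernet frames with a 1,500-byte payload, and the function names are hypothetical.

```python
# Fixed per-frame overhead on the wire, in bytes, for standard untagged Ethernet:
# preamble and start-of-frame delimiter (8), MAC header (14),
# frame check sequence (4), and minimum interframe gap (12).
WIRE_OVERHEAD_BYTES = 8 + 14 + 4 + 12


def frames_per_second(line_rate_bps: float, payload_bytes: int = 1500) -> float:
    """Maximum frames per second at a given line rate and payload size."""
    bits_on_wire = (payload_bytes + WIRE_OVERHEAD_BYTES) * 8
    return line_rate_bps / bits_on_wire


def per_frame_budget_ns(line_rate_bps: float, payload_bytes: int = 1500) -> float:
    """Time available to handle each frame, in nanoseconds, at full line rate."""
    return 1e9 / frames_per_second(line_rate_bps, payload_bytes)


for gigabits in (10, 40, 100):
    rate = gigabits * 1e9
    print(
        f"{gigabits}-Gigabit Ethernet: "
        f"{frames_per_second(rate) / 1e6:.2f} million frames/s, "
        f"{per_frame_budget_ns(rate):.1f} ns per frame"
    )
```

At 100 gigabits per second with full-size frames, that is about 8.1 million frames per second, or roughly 123 nanoseconds per frame, which gives a sense of why the rest of the system has to keep pace with the network.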

Demanding aerospace and defense applications are driving the need for increasing network bandwidth. "Real-time applications are the drivers -- things that must be as expeditious as possible, and speeds close to now," Hawley says. Radar applications, for example, "need data sooner rather than later so I can respond. That's why we are seeing the emphasis on performance."

Gigabit Ethernet

Ethernet has emerged as the data plane from a previous hodge-podge of switch fabric topologies, which in past years included Serial RapidIO, InfiniBand, Fibre Channel, StarFabric, and FireWire. "Everything we've seen so far has been Ethernet, or a derivative of Ethernet, that is data plane related, with PCI Express on the expansion plane," Atrenne's Hawley says.

"The data plane connects through a packet switch, and all boards in a system can communicate over the data plane, which is Ethernet," Hawley continues. "Ethernet is a very flexible architecture, but the problem is Ethernet requires a lot of software intervention, so the latency of Ethernet packets can be large, versus PCIe that takes two or three modules that takes more than one payload module. The latency issues with Ethernet have to do with its large software stack. That is not there with PCIe. It's all handled in hardware, which is a good real-time thing, as opposed to software."

The road to Gigabit Ethernet has not been easy, however. "100 Gigabit Ethernet has not been trivial to implement," Littlefield points out. "It has had signal-integrity issues, and it's been more of a challenge to get everything lined up with the board products and backplanes. People today, however, are adapted to that, and are working through getting all those bits and pieces together."

The shift in the embedded computing industry towards networked architectures, rather than conventional databus architectures, is driving the growth of Ethernet, says Aaron Frank, senior product manager at Curtiss-Wright.

"We've seen a growth from 10-, to 40-, to 100-Gigabit Ethernet technologies, driven by a net-centric approach where more of the data is being put across Ethernet, so Ethernet continues to grow," Frank says. Still, Gigabit Ethernet represents only part of the path forward because systems designers simply cannot brute-force data over the network and expect the best possible results.

"It's not just enough to put a 100-Gigabit network on a system," Frank says. "The system has to be able to ingest, process, and deliver that much data, in terms of results. It's becoming a system-level end-to-end solution to use these faster speeds and interfaces, with the growing use of optical interfaces. We used to deal with 1 gigabit Ethernet, copper could keep up, but now more of the interfaces are moving to optical. We are doing many things to make it easier for the systems integrator to use optical interconnects."

Open-systems standards

Perhaps the most influential emerging open-systems standards on high-performance computing today are the Sensor Open Systems Architecture (SOSA) and the Modular Open Systems Approach (MOSA) design guidelines. These standards seek to create an open ecosystem in which components from many vendors will function well together, facilitate rapid upgrades over time, and save money over the life cycle of embedded computing systems.

"People are able to make use of technology faster with SOSA," says Elma's Littlefield. Although industry experts have expressed skepticism about the value of SOSA since the standard's inception, "That skepticism has gone by the wayside at this point," Littlefield says. "It took us three years to get to that point."

The SOSA standard and the MOSA design approach have convinced even the industry's doubters because of their proven utility. "The market knows what to

The ability for several different vendors to provide interoperable components in different aerospace and defense systems is a chief motivation of SOSA and MOSA. "SOSA requirements are focusing on certain slot profiles, and subsets and combinations of these profiles that are seeing a lot of commonality," says Justin Moll, vice president of sales and marketing at Pixus Technologies in Waterloo, Ontario.

"One thing that is popular now is our SOSA-aligned chassis hardware manager," Moll says. "We provide the pinouts if the customer provides his own backplane. SOSA committees have the goal of incorporating compatible chassis management hardware using similar design approaches. These types of things are being proposed in the SOSA committees, and there needs to be agreements on connectors and pinouts. It is not defined in SOSA, but the committees will define it."

Moll also calls attention to a SOSA sister standard called the Modular Open Radio Frequency Architecture (MORA), which concerns RF and microwave systems rather than embedded processing. "That's where using standardized or open-standard solutions for RF are being developed. We have ruggedized a lot of National Instruments ubiquitous software-defined radios, and designed it into weather-proof IP-67 versions for outdoor use, as well as full-mil-rugged versions with 38999 connectors on them, for signals intelligence and powerful software-defined radios for electronic warfare and various communications and control drone detection and deterrence."

SOSA and MOSA also are paying off for Curtiss-Wright. "Our systems now are SOSA- and MOSA-aligned for multi-mission capabilities, where it can be used for one program and reconfigured for another mode of operation, so you can avoid putting in a whole different architecture," says Curtiss-Wright's Frank.

"That drives decisions, and aligns with the MOSA approach. MOSA drives down to SOSA, and seeks truly to use standard interconnects, and use standards effectively across the industry to help drive down the costs of long-term support and technology-refresh costs -- the total costs of ownership."

Artificial intelligence

Of all the potential aerospace and defense applications of future high-performance computing systems, "it's impossible to ignore artificial intelligence," says Chris Ciufo, chief technology officer at General Micro Systems Inc. in Rancho Cucamonga, Calif. "AI is finding its way onto the battlefield, and we have customers asking for systems with artificial intelligence built into the system."

Demand for artificial intelligence is beginning to influence the very architectures of today's most advanced embedded computing systems, Ciufo says. "In years

Often the most expedient approach for adding AI to embedded computing is to add GPGPU capability to conventional microprocessors and FPGAs. GPGPUs essentially are massively parallel processors that can execute many computing tasks simultaneously.

"Artificial intelligence means now you can take this system with GPGPU and CPU and throw at it computer and point it to a data base of sensor data, use AI algorithms, and you can do what an AI engine does, which is facial recognition, target tracking, object recognition," Ciufo says.

This kind of GPGPU-enabled artificial intelligence capability also should be able to apply AI-based image recognition to a two-dimensional image and infer details in its 3D image. "You now can look at a 2D facial image and infer based on the sculpting of your face what the back of your head looks like," Ciufo says. "Imagine a soldier with a weapon or sensor, and this computer can fill in parts of the picture that you can't see, of what is waiting around the corner. You can infer what the person hiding around the rock can look like."

U.S. military experts essentially are looking at modern machine vision capabilities on the assembly line -- chiefly looking for manufacturing flaws by uncovering details in the image that normally should not be there -- and extending those capabilities to military operations. "The trend is the U.S. Department of Defense sees what's going on in machine vision in the industrial market, and wants this on the battlefield as a force multiplier," Ciufo says. "Look at the actionable intelligence we can get from our embedded systems, and we can be a much more lethal force. We are seeing this requirement for mission computing with an AI co-processor much more frequently than we used to."

Today's high-performance computing capabilities are making artificial intelligence applications much more feasible than they were decades ago. "I think AI is here to stay this time," says Elma's Littlefield. "Deep learning used to be so computationally intensive that it wasn't really practical with slow processing then. Now people are finding more ways to make use of it."

One of the most promising applications of artificial intelligence in the military involves real-time object detection and recognition, Littlefield says. This could lead to self-protection systems aboard armored combat vehicles that could detect incoming fire, determine the kind of weapon involved, and deploy countermeasures or counter-fire quickly enough to avoid being hit.

In addition, Littlefield says, "The Air Force is talking about huge swarms of autonomous vehicles controlled by single or multiple aircraft." That capability will be built on real-time object detection and recognition.

Real-time embedded computing and artificial intelligence also are influencing future electronic warfare systems, says Bill Conley, chief technology officer at Mercury Systems in Andover, Mass. "With continued research into AI, what gets fielded is the most state-of-the-art algorithms we can come up with."

Conley points to the U.S. Navy Advanced Electronic Warfare (ADVEW) suite for the F/A-18E/F Super Hornet carrier-based jet fighter-bomber for aircraft survivability. "Fourth-generation aircraft must be able to sense what is in their environment, not fly too close to threats, and if they do, protect themselves," Conley says. "Pilots are seeing a lot of different radars, most of which are not threats, and must find the one that could be a threat. Pilots must sort their way through signals to find the real signals of interest."

This kind of EW challenge is just getting bigger. "It used to be you are looking for a needle in a haystack. Now you are looking for a needle in a stack of needles," Conley says. "It relies on automatic change detection, which is becoming very powerful. EW techniques have been developed by humans, and now the machine measures the substantial changes during a mission, and that means a lot more data is being ingested and used in the EW system. Data fusion from many sources sets up what is the critical data that has to flow that can feed the fusion algorithm."
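The idea of automatic change detection can be illustrated with a toy example that compares a current survey of emitters against a baseline and flags only what is new or has shifted. This is a deliberately simplified sketch and not a description of any fielded EW system: the emitter labels, the use of center frequency as the only feature, and the 5-MHz tolerance are all hypothetical choices made for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical emitter summary: label mapped to measured center frequency in MHz.
Emitters = Dict[str, float]


def detect_changes(
    baseline: Emitters,
    current: Emitters,
    tolerance_mhz: float = 5.0,
) -> Tuple[List[str], List[str]]:
    """Return (new, shifted) emitter labels relative to the baseline survey.

    An emitter is "new" if it is absent from the baseline, and "shifted" if its
    center frequency moved by more than tolerance_mhz since the baseline.
    """
    new = sorted(label for label in current if label not in baseline)
    shifted = sorted(
        label
        for label in current
        if label in baseline
        and abs(current[label] - baseline[label]) > tolerance_mhz
    )
    return new, shifted


# Made-up values purely for illustration.
baseline = {"radar_a": 9400.0, "radar_b": 3050.0}
current = {"radar_a": 9402.0, "radar_b": 3100.0, "radar_c": 5600.0}

new, shifted = detect_changes(baseline, current)
print("new emitters:", new)
print("shifted emitters:", shifted)
```

In this made-up data, `radar_c` is reported as new and `radar_b` as shifted, while `radar_a` stays within tolerance and is ignored. Filtering out the unchanged background in this way is what leaves a smaller set of signals of interest to examine.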

What the future holds

The pressing need for artificial intelligence in future military applications points out how quickly threats are changing. Is the current state of the art in open-systems standards, fast processors, and high-speed networking up to the challenge? The simple answer is no, says Mercury's Conley.

"The systems you start the war with, and the systems you end the war with, often are very different," Conley says. "You need to look at the speed of technology introduction. When you are in the fight, open systems are much less important than it is to bring the best capability into the fight."

It may be that the pace of open-systems standards development, approval, and industry acceptance simply will not be fast enough to meet the demands of future warfare. "How do we introduce innovation at a speed and scale that results in a change of strategic outcome and result?" Conley asks. "That is where openness is helpful, but in itself may not be sufficient to fully solve that problem."

About the Author

John Keller

Editor-in-Chief

John Keller is the Editor-in-Chief of Military & Aerospace Electronics Magazine, which provides extensive coverage and analysis of enabling electronics and optoelectronic technologies in military, space, and commercial aviation applications. John has been a member of the Military & Aerospace Electronics staff since 1989 and chief editor since 1995.