The double-edged sword of advanced computer boards

Advances in single-board computers continue to shrink functionality into ever-smaller spaces in chassis and air transport racks, yet the level of component shrinkage is highlighting other crucial engineering issues, such as power consumption and cooling.

By J.R. Wilson

Gases have no fixed volume or shape; they expand to fill all available space. So do computer applications. As a result, while technology is constantly shrinking the size of increasingly powerful processors and other components, applications are rapidly filling the newly available volume.

What once took a rack has been reduced to a box, a box to a card, and a card to a chip. Several technologies are playing a role in this rapid, ongoing evolution, including such developments as computer on a chip (COC), system on a chip (SOC), system on a module (SOM), switched fabric, blade servers, single-board multi-computers (SBMC), PCI mezzanine cards (PMC), PCI-X, Advanced Telecommunications and Computing Architecture (TCA), VMEbus Switched Serial (VXS), VME Renaissance, and more. Still, the rack remains, now filled with ever-more highly complex and capable components.

"You get more and more functionality on the chip level, the mezzanine level, the board level, the chassis level," says Kishan Jainandunsing, vice president of marketing for PFU Systems (nee Cell Computing) in Santa Clara, Calif. "I don't think you will ever see boards replacing racks, but you will see higher and higher inclinations, with racks containing much more functionality. A good example of that is high-density server blades, which are only possible because of the higher integration you have today, combined with lower power requirements."

PFU officials have become strong proponents of the system-on-a-module concept, which Jainandunsing says can best be compared to macro components. He points to implementations of SOM already in place in avionics, special programs for new fighter jets and bombers, in tanks, automated fire control, portable tactical terminals, submarine power management and navigation controls, and tablet computers.

"Just as you would have system on a chip, you would have multiple board functionality integrated into a single module, that then becomes the macrocomponent to be used in a system," he says. "When we introduced the SOM concept, rather than SBCs designed from scratch, we were looking to specifically satisfy form/fit/function (F3), quality, scalability, extended product life cycle, time to market, and low power. The SOM concept gives gain in time-to-market because, depending on the complexity of the I/O functionality, you have one single design with all the I/O functionality integrated and does not require you to qualify extension modules, which probably would come from multiple vendors, thus significantly increasing the acquisition and test phase."

The SOM also reduces development risk because the designer needs only deal with old I/O signals and not high-speed technology designs. "Scalability plays out nicely because, if you look at an OEM trying to design a product, you have to have some area for future expansion. With SOM, you can more easily scale the performance of the system. It also allows you to continue to innovate on the I/O side," Jainandunsing says.

"If you have embedded components in SOM, with a guaranteed 5-year lifetime, you can easily double that. In the embedded market, everything is custom-made. That means a great deal of the focus of the companies in this market is on customer support. With SOM, we can exceed specifications for ruggedization, such as shock and vibration."

For aircraft designers, the trick is to use these new technologies to get more information to the pilot that he needs to do the job more effectively and more efficiently. At the same time, aircraft designers must deal with changes in electronics that — for relatively old systems — must work within fixed spaces and with available power and cooling.

"The size of the system doesn't change; what changes is what you can do with that space," notes Steve Paavola, chief technology officer at Sky Computers Inc. in Chelmsford, Mass. "Multiprocessor computer systems translate directly in terms of megaflop per cubic foot that we can deliver to the customer. Today we can put a teraflop of computing power into a volume of about 1 cubic meter, compared to about a quarter of that three years ago, with the same power and cooling and even the same number of processors.

"Submarines are an interesting example of changing design, where you can scale the space allowed for the computer based on increased power," Paavola says. "That can mean a dramatic cost savings. Sonar is a good example. Years ago, the only way to solve a sonar problem was with very dense computing technology. Today, they are solved by standard workstation technology."

Motorola's VME Renaissance effort is attracting several different supporters, including Thales Computers of Raleigh, N.C., and VME Microsystems International Corp. (VMIC) of Huntsville, Ala. "Rumors of VME's death have been around for years, but it refuses to die," says VMIC marketing vice president Michael Barnell.

Leaders of Motorola Computer Group in Tempe, Ariz. say the VME Renaissance is not only a life extension for VME, but also is generating new technologies and structures in the process, including PMC and PCI-X.

"Most PMCs are actually geared to I/O, whereas the ones we produce have computing complexes on them," says Jeff Harris, the Motorola group's director of research and system architecture. "There is a standard in the VME International Trade Association (VITA) Standards Organization (VSO) for that and the state-of-the-practice interconnect is 64 bits wide, 66MHz.

"PCI-X is the next generation of PCI, which enables you to go 64 bit wide, 133MHz," Harris says. "The VME Renaissance kicked off a number of different standards efforts, including the PCI-X interconnect, which also is run through VSO and is called VITA 39, for which Motorola is the editor. They are about to begin the balloting process. That will give a much faster interconnect to these submodules, not only for the processor complex PMCs but for the I/O and storage PMCs, as well." PCI-X balloting may be completed in August, he says.

Harris predicts the first VXS product will be on the market by the second half of 2003. The first adopters are expected to be those who need high speed interconnects and a lot of processing power, such as radar, sonar, imaging, medical imaging, and semiconductor processing equipment involving lithography.

Harris says processor PMCs offer systems designers a modular way to add computing capability to a system simply by snapping one onto a VME or CompactPCI card, whether to increase capability for prototyping or to build a highly extensible system.

"There are some interesting things in development," he adds. "We are working on mezzanine cards and submodules that have point-to-point interconnects. The PCI bus is a parallel multidrop bus, which was good for its day but is starting to bump into the law of physics in terms of the signal integrity. In order to get increased performance, the next generation of submodule interconnect will be some kind of point-to-point connect rather than multidrop. These are what we have on the drawing board for the mezzanine cards and possible new form factors that might come to light because we have point-to-point interconnect. Those may be form factors even smaller than a mezzanine card or a PC-104 card."

High-speed switched point-to-point interconnects are serial links that run as fast as 10 gigabits per second on each link. Four can bond together for a 4x or 40 gigabit-per-second link.
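The bonding arithmetic is easy to check. The Python sketch below is a minimal illustration that treats the quoted 10 gigabits per second as a raw signaling rate and ignores line-encoding and protocol overhead, which the article does not specify; both function names are hypothetical.

```python
def bonded_link_gbps(per_link_gbps: float, links: int) -> float:
    """Aggregate raw rate of several serial links bonded into one logical link."""
    return per_link_gbps * links


def raw_gbytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


single = bonded_link_gbps(per_link_gbps=10, links=1)
quad = bonded_link_gbps(per_link_gbps=10, links=4)

print(f"1x link: {single:.0f} Gb/s raw, {raw_gbytes_per_s(single):.2f} GB/s")
print(f"4x link: {quad:.0f} Gb/s raw, {raw_gbytes_per_s(quad):.2f} GB/s")
```

Usable payload throughput would be lower than these raw figures once encoding and protocol overhead are counted.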

Advanced TCA is a new form factor. Its creator and sponsor is the same industry group that created CompactPCI, although it is not compatible with CompactPCI: Advanced TCA cards are much wider, deeper, and taller than CompactPCI cards, which allows for more components on each card.

"The consequence of having a much larger card is you have to start deriving modularity in a different way," Harris explains. "Imagine a vertical blade so large it is almost another motherboard. So I think you're going to see a lot of new submodules to plug into these new form factors — and those will be switched point-to-point and probably hot swappable and managed entities. Normally, if you are doing systems or network management on a chassis, you can take a specific card out of service, address the blade, and tell it to reboot itself. Now you will be able to do that kind of management on a submodule basis because these submodules will be pretty intelligent.

"With all these submodules fitting on the blade, it will become a blade server," Harris continues. "This will create a much more open and flexible architecture, where you can combine different submodules to bring different capabilities together to customize their solution. For example, right now if you buy a CompactPCI or VME card, you get a certain functionality. But if you have a form factor three times the size of one of those, that's a lot of circuitry, so in order to not make them so narrowly focused that they are single-purpose cards, you will see combinations of submodules to personalize these large form factors, make them more flexible."

He predicts an increasing number of enterprise computing scenarios will go to vertically slotted backplanes rather than the traditional "pizza box" approach, moving the enterprise closet from horizontal to vertically slotted server blades. That also will make it denser.

Now the evolving technologies begin combining and clashing — with Moore's Law continuing to see computing power increase at a rapid pace as circuitry continues to shrink and form factors go in the opposite direction, growing larger and larger with more and more circuitry. It is that trend that is driving the development of submodules.

"The end result is we have large form factors that can draw more power and have better cooling, so they can address enterprise-type computing and storage scenarios," Harris says. "And in order to make them modular and flexible, we have new submodules that have point-to-point interconnects, are hot swappable, and are managed entities.

The dawn of VXS

However, these new form factors are incompatible with relatively old systems, thus bringing into play Motorola's VXS or VITA 41 effort.

"VXS essentially brings switched point-to-point interconnects to the VME backplane, where they can coexist with the VME multidrop bus," Harris explains. "The advantage of that is you are able to move to switched point-to-point interconnect on a VME backplane while keeping all your legacy equipment interoperable with the new technology.

"To my knowledge, VXS is the only solution that allows high-speed switched point-to-point interconnects that does not require a new form factor," Harris continues. "Advanced TCA requires a new form factor to bring switched point-to-point interconnects to reality, as does VITA 34 or APS [advanced packaging system]. The problem with that is you have to abandon all your legacy. VXS keeps all your legacy and adds the capability of switched point-to-point interconnects to the backplane."

The purpose of the VME Renaissance is to infuse new technologies into VME to keep it an attractive place for new designs for at least another 20 years, continuing its 20-year history of backward compatibility even as new technology arrives. It also aims to allow new applications that push the edge, such as building signal processing on VME, while accommodating all legacy applications and equipment.

"There are a bunch of technological innovations we are rolling into VME," Harris says. "First, we are making the parallel multidrop bus faster by implementing 2eSST [two-edged source synchronous transfer], which changes the transfer protocol from VME 64x, which is asynchronous, by removing the acknowledgment handshake and uses a DDR [double data rate] technique, transmitting two bits per clock cycle, which allows about an eight times performance increase in the parallel bus transfer rate. Beyond that is bringing the point-to-pint interconnect on the backplane down to each and every VME daughter card, which will give us point to-point-interconnect between the submodules on an individual daughter card.

"The VME Renaissance brings focus back to VME and by infusing all this new technology, I think we will see additional designs being placed on VME," Harris says. "And with the innovation of VXS, I think you will see VME moving into the enterprise space in terms of compute blades and storage space. VME will have a strong, wide audience, which is good for the users of the product."

While pumping new life into VME, these new technologies also have been part of an expansion of options available to designers, such as the Thales effort to create designs that depopulate the VME bus interface and use it for embedded controllers. For instance, a designer could build a blade server with a VCE405 as a base and use the PMC card for expansion, without using the VME bus.

The VCE405 board uses the Thales ASIC technology, which is actually a follow-on to the IBM Alma PCI-VME bus bridge chip and a competitor to the Universe or VIC64 (VMEbus interface controller).

"The VCE405 was designed to be a low-cost expansion board capable of handling various forms of I/O and deliberately designed to be a cost-effective solution and expand the functionality of the system, if not necessarily the most powerful card you can slide into a system," says Thales engineering vice president George Schreck. "We were clearly aiming for a target of best-price performance while still providing best functionality. It's new to the market and we're targeting a number of airborne applications. The board is extremely low weight and, because of its low power consumption, we were able to build it without massive heat sinks. We're also looking at applications that are ground mobile and will see high shock and high vibration" such as main battle tanks, self-propelled artillery, and infantry fighting vehicles.

Schreck says officials of the French Ministry of Defense have ordered the board for applications they are not revealing, but the U.S. customers the company has so far have been somewhat of a surprise. Most of the initial sales have been to commercial accounts, for such applications as down-hole communications for oil wells and as a trackside controller on a light rail project.

Duncan Young, marketing director at Dy 4 Systems Inc. in Kanata, Ontario, says boards have survived and will continue to survive the ongoing technology shrinkage by being reinvented to suit customer needs. Dy 4 became a business unit of Force Computers in January.

"There is an argument that you could shrink a lot of the functionality down to a single chip, but there is still innovation that goes into SBC and still differentiate between various product vendors," Young says. "That is particularly true of the military. So you could argue that a processing system could be shrunk down as low as it can go but there will always be the extra bits and pieces that differentiate one vendor's board from another and customers will always want to capitalize on those differences, whether it is adding graphics or different buses or whatever on the board."

What will change — although perhaps not in the next five years or so — is the form factor, Young predicts. Designers, he says, will turn increasingly to switched fabrics to deal with the power concerns that come with ever-higher levels of integration.

"If we go to switched fabric, why do we need to stick with existing form factors? If you go to SBCs connected through switched fabrics, you have a whole new way of thinking," Young says. "The company that can think of the better way of designing the mousetrap will be the one that wins," he says. "I wish we could foretell the future of switched fabrics. There are way too many choices now and not all of them are actually viable. Many of the proposed switched fabrics don't actually exist today in silicon — they're tomorrow's technologies."

While Dy 4 has chosen to go with StarFabric from StarGen of Marlborough, Mass., for now, Young acknowledges it has more than 60 competitors, none of which has a secure market position. He predicts only two or three will survive the eventual shakeout — but no one can predict which ones.

"It's almost like switched fabrics in the embedded aerospace marketplace are still looking for a real killer application," he says. "But those will come along, in two or three years — probably in DSP applications, where they need the large bandwidth to process the incoming information."

The growth of SBC capabilities also is forcing some companies that previously ignored the technology to change position, as product marketing director Richard Jaenicke says is the case with Mercury Computer Systems Inc. in Chelmsford, Mass.

"With these faster processors, multicomputing is moving down to a board or two. But you still want all the connectivity and high performance you would find in a high-end multicomputer, so you need multiple processors on a single board connected by a switched fabric as well as connections between boards. You also need software that can communicate effectively, with high throughput, through those processors and access high performance hardware," he says.

"To keep the same performance levels, the drivers for the design of the system — although it may still be as large — change dramatically," Jaenicke says. "So whether you are talking about the packaging or the communications between the various parts, the requirements are different. If you think about the PCI bus, which was invented as a peripheral bus, and the idea that all peripherals will soon be incorporated onto the chip, what kind of communications do you need off the chip? Obviously, a system interconnect, which is not the same requirement as previously existed for a peripheral connection."

The end result, he continues, is filling the same size box back up with entirely new and different components, including different kinds of connections between chips within the box than were previously used.

"You also have to think differently about the packaging, because the integrated chips may be higher power and produce more heat, so power becomes more of a point source and you have to consider more heat spreading," Jaenicke says. "Cooling under military conditions is a challenging issue in the first place and becomes even more so as you concentrate more chips into a smaller area."

The role of blade servers

Another area in which all of the new technologies are coming to a head is the blade server, which is essentially a thin, modular electronic circuit board, usually with multiple microprocessors and onboard operating system and, frequently, built-in application. Individual blade servers are usually hot pluggable and come in various heights, including 3U, 1U and even "sub-U" (1U equals 1.75 inches). Several blade servers, which share a common high-speed bus, can be installed together and are designed to create less heat while compacting capabilities into a smaller space.

"It has a pretty bright future," says Mark Patelle, marketing vice president for Sky Computers. "There are a lot of new technologies and product innovation coming along that will fuel the growth of the blade server market, including standards for switched backplanes and switched fabrics. The open-architecture blade server will drive the popularity, just as Unix took over 20 years ago in the open architecture workstation arena.

"Sky has successfully implemented systems with hundreds of CPUs for special class problems, from tornado prediction to military applications, including very-high-resolution machine inspection," Patelle says. "But that isn't the sweet spot of our market going forward. The shrinkage and increased power of components will let us build racks with more CPUs, not necessarily measured by raw gigaflops, but in terms of resilient architecture, scalability, manageability, and productivity that can be achieved from a developer perspective. Raw power will remain one criteria in marketing computers, but other elements are going to be just as important."

Jaenicke says he agrees, in some respects, but sees only marginal potential for blades in the military.

"It doesn't seem like a good environment for most of those. I certainly don't see a lot of applications in a fighter jet, for example," he says. "It may be the nature of the military systems with which we are involved — highly mobile, somewhat-harsh-environment platforms," Jaenicke says. "More benign environments, even in the military, can take the power and space of blades as opposed to a true embedded solution. But, generally, the signal and image processing computers Mercury develops are not used in benign environments. Our applications don't require lots of disks; we usually require a reasonable amount of memory, but we're talking a gigabyte or a few gigs, not terabytes, which may be more blade-oriented."

Mezzanine cards

Miniaturization and more integration also are increasing the role of mezzanine boards, further compounding the heat issue and the need to restructure packaging.

"Some of the board form factors are running up against their limits," Jaenicke says. "Some are proposing mild changes, such as VME becoming VXS, while others are looking at PICMG (PCI Industrial Computer Manufacturers Group), which is wildly different from CompactPCI (which is 3U to 6U, very similar to VME); PICMG, responding to the telecom industry's requirements, is closer to a 9U board, a much bigger board. The spacing between the boards also is greater. Those are drastically different form factors for the board, which is being done to compensate for hotter components. They are actually going to bigger boards as a typical solution."

All of the form factors seem poised for a change as the industry enters a transition period. While that unfolds, however, it becomes more difficult for applications developers or system integrators to standardize on any one specific open standard, not only because there are competing standards, but because almost every standard is switching to its own next generation.

"That brings us back to the mezzanine, which we see playing an increased role in standards interoperability of systems in the future," Jaenicke says. "One thing that is constant in all these different options is that almost all have a definition for being able to incorporate a standard PMC mezzanine card. Because of the shrinking of the more highly integrated components, the functionality you can fit on a PMC is equivalent to what used to take a whole board. Almost every I/O interface can fit on a PMC, as can ASICs. So a lot of products will migrate to a standard interface at the mezzanine level. At that point, it doesn't matter which of the standard boards you choose or even if the boards are standard."

Mercury officials say they believe that process is inevitable and have begun incorporating it into the company's standard product line. One such product is the single-board multicomputer, which combines multiprocessor and SBC functionality with several different chips for handling signal and image processing. That may involve several G4 processors connected by a switched fabric, along with a separate I/O processor acting as a single-board host with integrated I/O, such as Ethernet built directly into the chip. It also controls the I/O coming through the mezzanine. Both the PMC site and the host processor also connect into the switched fabric and are full members of the parallel processing system.

"This can be used as a stand-alone board or multiple ones can be connected together with the same switched fabric between boards," Jaenicke says. "Integration drives the combination of single and multiboard computers and the power consumption of these integrated devices drives new packaging options that lend themselves to standardizing more on mezzanine cards that can grow across multiple generations of platforms as well as computing types of platforms."

Open-architecture blade servers

Thales officials say they believe the future for open-architecture blade servers is strong, if not immediate, for an old reason.

"We need lower cost, and greater expandability," Schreck says. "One thing that will promote that architecture idea is some type of better networking capability. We're looking at offering a 19-inch rack-mountable unit with a processor board, fans, and I/O expansion that will be driven by the VCE405. The idea there is to get an inexpensive unit with a lot of horsepower.

"When we set our strategic direction, we had not included telecom blade servers as a target area," Scheck continues. "We were looking at defense and aerospace, qualifying that with rugged extended-temperature products, primarily based on VME but allowing for other modules. So blade servers are interesting to us because we see the potential for the future, but they don't fit very well into our short-term strategies."

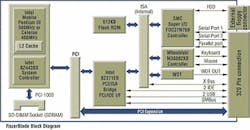

At the core of Thales Computers' VCE405 is the 375 MIPS/266MHz IBM PowerPC 405GP, a 32-bit RISC embedded controller featuring typical power consumption of 1.5 watts and two PMC slots for maximum I/O flexibility. Thales officials say that, coupled with the high-performance ALMA2e PCI-64/VME bridge, makes the 6U VCE405 well suited for critical environments such as aerospace systems and medical equipment, where high-compute power and low power consumption and dissipation are a concern.

While some in the industry see the PCI bus as having reached the end of the road in terms of new design requirements, PFU's Jainandunsing disagrees — for now.

The end of PCI?

"The PCI bus is essentially a 32-bit bus at 33MHz," Jainandunsing says. "Certain applications are starting to look at the feasibility for a 64-bit, 66MHz bus, which would provide four times more throughput. That is still pretty much a niche case in military technology, but when you look at intensive image processing applications, remote sensing and such, they are looking for a much wider bus capability," he says.

"But PCI will continue for the next 10 years to be very strong," Jainandunsing continues. "There are several new initiatives for such things as 3GIO (third generation I/O interconnect), which recently was baptized as PCI Express. It is more of a point-to-point infrastructure than a bus infrastructure and is serial differential rather than parallel non-differential. 3GIO will, toward the eighth year in its cycle, start to more rapidly replace the PCI bus. That would be around 2010."

The potential trend is for increased processing power in embedded applications, requiring a matching throughput on buses that moves designers away from PCI to a wider bus and subsequently to PCI Express to get an even better match between the I/O and CPU bandwidth. That also follows the trail of memory bandwidth, which has evolved from EDO to PC66 to PC100 to PC133 to DDR.

"For servers, to get a long view, the industry is looking at making computing a utility, like gas or electricity — the buzzword there is grid computing. In that scenario, the users get access to computing the same way they would get access now to gas or water," Jainandunsing says.

"That requires massive amounts of computing resources across the grid. But the time is right for that with the phenomenon of low power/high density, so you can put 32 servers in a 3U 19-inch-rack form factor at a fairly low power. Most don't need a Xeon-type processor because lower power CPUs can collaborate on a single task in larger quantities. The load can be dynamically configured on these PCUs as the number of users increase or decreases."

For the military in particular and aerospace in general, all of this means an intensification of the effort to switch commercially developed technologies to more rugged environments for which they really were never designed. In short, the decade-old love/hate relationship with COTS will only grow.

"It is a classic issue for commercial products to have a lifecycle of at most three years, then they are thrown away. But a military product may go on the shelf for 20 years before being used and has to remain useful for the entire lifecycle of the platform," notes Sky Computers's Paavola. "Another question is how long does it take to get from design to deployment. You may have a six-year development cycle on the platform and the COTS technology it is based on has gone through three or four generations. A number of programs are trying to design the development program so when they get to production, they can do a technology insertion and go with the technology that is current at that time."

One commercial product making ever-growing inroads into military applications is Windows NT, although some in industry predict an embedded version of Windows XP may be the next big development.

"There is a trend toward a broader adoption of Intel-based single board computers. The performance of those products is becoming comparable to a PowerPC platform and developers have a strong familiarity with them and there is a wider range of tools to choose from, although PowerPC remains a big piece of the market," says VMIC's Barnell. "You can get a lot of horsepower and processing power even from a Pentium 3 or Celeron processor with 1.3GHz and 1GB of memory. There is a much stronger move toward Windows now than a few years ago, in part because of some COTS initiatives made by the Navy, which is using a lot more Windows-based systems than before."

As all of these new technologies impact the design of new and retrofit systems, the basic rule of thumb remains intact: The size of the rack defines the footprint of the system, but companies and military programs are just packing more into that same footprint, sometimes moving more functionality into one rack that once resided on multiple racks. In the end, miniaturization will take place within the components, not within the footprint, which will boast a lot more functionality.

"Customers will always want more performance," says Dy 4's Young. "Whatever size of processor you give them today, they will always find a need for more. Most SBCs today have a single PowerPC on them, but the trend is to have two or more — any number of PowerPCs — and vast amounts of memory — and customers will design their applications to fit. If the technology were to stand still today, you could implement today's SBC on a chip in a couple of years time, but the need is constantly moving forward; the clock doesn't stand still."

High-tech silicon, low-tech concrete

As boxes, boards, cards, and chips continue to shrink in size but increase in computing capability, several different new concerns must be taken into consideration by designers, especially those dealing with retrofitting old platforms.

Shrinking the size and weight of the computer component in an already tightly packed fighter jet or helicopter might seem an obvious advantage. And for those still on the drawing boards, that is true. For relatively old platforms, however, this shrinkage can create serious problems with the aircraft's center of gravity (CG), which was designed around components that are older, larger, and heavier than comparable parts are today.

"There are planes flying around with lumps of concrete in them to recreate the CG after shrinking computing components," says Duncan Young, marketing director at Dy 4 Systems in Kanata, Ontario. "You also have heat problems. Those aircraft were designed for a certain level of heat dissipation; if you change that, you run into more problems because you don't want to have to redesign the cooling system. However, processors, memory, and graphics are all pretty much commercial technology, so you have no choice.

"There are natural breaks where you just have to accept that a change is necessary," Young goes on. "In the embedded world, it may well be a mix of hardware and software. In that environment, you only have a few OS suppliers, like Wind River [of Alameda, Calif.] and Green Hills [of Santa Barbara, Calif.], which pretty well track the hardware developments from Intel and Motorola and develop their products in tandem. The customer, however, has to worry about the application layer, not the hardware and OS underneath it."

Addressing those concerns requires not only adopting an open architecture (currently VME or CompactPCI, for example) for customers with long-term requirements, but also making certain that any new generation of cards is backward compatible with previous generations. While the board designer must worry about power, I/O, and the integration path from one processor to the next, customers must rely on software to insulate themselves from the problems of individual processors.

Of course, the answer to CG isn't always concrete; especially on larger platforms, the solution is often more concrete in terms of increased safety, situational awareness, and aircraft mission, explains Mike Valentino, senior manager for advanced computer applications at Lockheed Martin Systems Integration-Owego in Owego, N.Y.

"Where we used to use one black box for flight manager, one for armament control, one for display processing, sensor processing, general-purpose mission processor, etc., now we integrate all that into an integrated display and mission processor in a single box. That is a significant savings in space and power, logistical cost, and improved reliability," he says.

"We've worked Navy programs where we've saved enough space and power and weight with systems integration that they have to compensate for the loss of weight. That typically means they will add more function," Valentino continues. "On the B-1B, we took a box that used to have 17 or 18 cards in it and probably used 400 to 500 watts. Our replacement box, which is the exact same form factor, has three cards, probably four to five times the processing capability, and 10 to 12 open VME slots for future growth." Future B-1B avionics upgrades will include digital map storage and software that will enable crewmembers to rewrite the mission plan while it is happening, he says.

For Valentino, the concern is not so much for CG as it is for power, heat, and vulnerability, all of which intensify as systems shrink.

"At the processor design level, we could fit the whole processor on a single chip one inch square, which means I now have 10 to 30 watts in a single square inch where I used to have it across 17 pages. So you now have very concentrated power in very small places, which brings unique engineering challenges. Also, in that single inch square, what used to be digital processing, as far as the EMI [electromagnetic interference] and EMC [electromagnetic compatibility] guys are concerned, it is now RF, so you have new and unique shielding requirements.

"Our environmental people are very creative," Valentino says. "There are two basic approaches. You find a way to specifically cool that square inch, say convection cooling. At 75,000 feet there isn't much air to move around, so fans don't work; we have to find other ways, such as attaching a special metal. Another approach is what the environmental guys call the 'beer cooler'. You take less rugged cards, isolate them from the environment, add refrigeration, shock absorption, etc."

That approach will not work on helicopters or jet fighters, where space is limited, but has been used on larger platforms, such as the Airborne Warning and Control System aircraft, better known as AWACS.
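
To put Valentino's power-concentration figures in perspective, the rough Python sketch below compares the same 10 to 30 watts spread across 17 cards with that power confined to one square inch. The article does not give the card dimensions, so the page area used here (that of a 6U board, 233.35 mm by 160 mm) is purely an assumption, as is the idea that heat was spread evenly over each card. Treat the result as an order-of-magnitude illustration only.

```python
SQ_MM_PER_SQ_IN = 25.4 ** 2  # 1 inch = 25.4 mm


def power_density(watts, area_sq_in):
    """Average power density in watts per square inch."""
    if area_sq_in <= 0:
        raise ValueError("area must be positive")
    return watts / area_sq_in


# ASSUMPTION: card ("page") size is not stated in the article; a 6U board
# outline is used here only as a placeholder.
PAGE_AREA_SQ_IN = (233.35 * 160.0) / SQ_MM_PER_SQ_IN

PAGES = 17               # from the quote
CHIP_AREA_SQ_IN = 1.0    # from the quote
CHIP_WATTS = (10.0, 30.0)  # from the quote

for watts in CHIP_WATTS:
    spread = power_density(watts, PAGES * PAGE_AREA_SQ_IN)
    concentrated = power_density(watts, CHIP_AREA_SQ_IN)
    print(f"{watts:.0f} W: {spread:.3f} W/sq in across the cards, "
          f"{concentrated:.0f} W/sq in on the chip")

# With power held constant, the concentration factor is just the area ratio.
concentration_factor = (PAGES * PAGE_AREA_SQ_IN) / CHIP_AREA_SQ_IN
print(f"concentration factor: about {concentration_factor:.0f}x")
```

Because the power is the same in both cases, the ratio depends only on the assumed card area; a smaller card would give a smaller factor, but the heat would still be far more concentrated than before.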

Packaging the new generation of smaller, more powerful chips also is a problem at the very base level, especially when attempting to upgrade from one generation to the next without changing the motherboard.

"Typically, commercial processors — Pentium, PowerPC, whatever — are plastic components, ball grid arrays [solder balls on the bottom attaching to the motherboard]. The coefficient of expansion of the board you attach it to can be different, but the aircraft are still going over the full military environment range, so the solder ball may give if it is different than before, meaning your long-term reliability suffers greatly," Valentino says.

"We spend a great deal of time and energy finding a way to package commercial chips on a ball to be rugged. On the B-1, B-2, Space Shuttle — we're talking 30 to 50 years of operation. We can get commercial boards and get the functionality we need, but in five years they don't work anymore because they can't withstand the environment. So we have to find a way to extend that lifecycle."

Radiation is another issue facing shrinking processors and systems in high-altitude aircraft and spacecraft.

"The Space Shuttle computer flying today has an array of error-correcting memories and single-event-upset detectors," Valentino says. "One of the things we're going to start seeing, as we shrink these processors more and more, are the core voltages inside the processors go down and particle upsets go up because you have less margin if a particle of some energy slams into the chip."