Today’s rugged chassis designs offer fast throughput, ruggedization, advanced cooling, adherence to emerging industry standards, and options for optical fiber interconnects.

Rugged embedded computing backplanes and chassis for military and aerospace applications are helping lead the aerospace and defense industry into a new era of high-speed data throughput, standard interoperable architectures, and thermal management to tame even the most powerful of today’s server-grade microprocessors.

Perhaps at the forefront of these technology trends are backplane data bandwidths that are expected to yield throughput of 100 gigabits per second within the next couple of years, as well as hybrid electronics cooling technologies that blend conduction cooling, convection cooling, and liquid cooling. Traditional copper interconnects are expected to yield their leadership to optical fiber as data speeds increase, and emerging industry standards should offer an attractive balance of performance, cost, and maintainability.

Suffice it to say that today’s backplanes and chassis are not your father’s embedded computing. Technology has come a long way since the heyday of VME systems, and is evolving rapidly to pave the way to future military and aerospace uses of artificial intelligence (AI), machine learning, sensor fusion, and advanced command and control.

The Aitech rugged 3U VPX C877 single-board computer combines a 12-core Intel Xeon processor with as much as 1 terabyte of on-board, security-protected SATA solid-state storage.

Data throughput

One of the most exciting developments in embedded computing backplanes and chassis is a path towards widespread use of 100 Gigabit Ethernet copper and optical interconnects at the backplane.

“The big sea-change is people in the past were happy to move data at 10 gigabits per second, but today people are pushing the edge of the envelope,” says David Pepper, senior product manager at embedded computing specialist Abaco Systems in Huntsville, Ala. “In just a very few years, 40 Gigabit Ethernet came on the scene, and now 100 Gigabit Ethernet is available. For us today it is typical to do 10- and 40-Gigabit Ethernet, but we need to be ready for 100-Gigabit tomorrow.”

These faster speeds among embedded computing boards and boxes could lead to new implementations of heterogeneous computing that blends general-purpose processors, field-programmable gate arrays (FPGAs), and general-purpose graphics processing units (GPGPUs) for AI, machine learning, vision systems, cyber security, and smart data storage.

“We’re looking at making everything faster with the switch fabrics, and to move at the rate of technology itself,” says John Bratton, product and solutions marketing manager at Mercury Systems in Andover, Mass. “The world is moving faster than it ever has before; machine autonomy and AI are moving into edge processing to accommodate more sensors, sensor fusion, and a deluge of sensor information to make intelligent, real-time assessments of the environment.”

Several companies already have announced embedded computing products that involve 100-Gigabit Ethernet interconnects — among them the Curtiss-Wright Corp. Defense Solutions Division in Ashburn, Va. “Pushing VPX to higher and higher transmission speeds to get more capability is a clear backplane trend,” says Ivan Straznicky, chief technology officer at Curtiss-Wright. “We announced a 100-gigabit VPX product in January 2018.”

Enhanced data throughput especially is crucial for leading-edge aerospace and defense applications like radar and electronic warfare signal processing, Straznicky says. “We have a clear demand from customers for those applications and those speeds. With more processing comes memory bandwidth and communications among the processors, and switching between the systems.”

Today the majority of high-performance embedded computing (HPEC) applications are using interconnects that run at 10 to 40 gigabits per second, “but in the next two or three years you will see products coming out at 100 gigabits, and that technology could become mainstream in the next five years,” Straznicky says.
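To put those line rates in perspective, the short Python sketch below estimates how long it would take to move a fixed block of sensor data across a single link at 10, 40, and 100 gigabits per second. The 1-gigabyte block size and the function name are illustrative assumptions, and the calculation ignores protocol overhead, encoding, and switch latency, so real-world times would be longer.

```python
def transfer_time_seconds(payload_bytes: int, line_rate_gbps: float) -> float:
    """Ideal time to move payload_bytes over a link at line_rate_gbps.

    Assumes the full line rate is available to the payload (no protocol
    overhead), which is optimistic for any real Ethernet link.
    """
    if line_rate_gbps <= 0:
        raise ValueError("line_rate_gbps must be positive")
    bits = payload_bytes * 8
    return bits / (line_rate_gbps * 1e9)


if __name__ == "__main__":
    payload = 10**9  # hypothetical 1-gigabyte block of sensor data
    for rate in (10, 40, 100):
        ms = transfer_time_seconds(payload, rate) * 1e3
        print(f"{rate:>3} Gb/s: {ms:.0f} ms")
```

Under these idealized assumptions the same block that occupies a 10-gigabit link for most of a second clears a 100-gigabit link in a tenth of that time, which is the kind of headroom radar and electronic warfare signal processing is looking for.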

Not every embedded computing application will need 100-gigabit throughput, Straznicky cautions. “Mission and flight computers, for example, really don’t need it yet,” he says. “Only the most demanding applications, like signal processing for radar, EW, and signals intelligence, will be the pioneering applications for 100-Gigabit Ethernet.”

The VITA 48.4 Chassis from Elma Electronic offers liquid flow through cooling and has four 6U OpenVPX (VITA 65) slots.

Signal integrity

Increasing signal throughput in today’s high-performance embedded computing systems comes at a price, however. Perhaps chief among these design tradeoffs is compromised signal integrity; as speeds increase, the problem becomes worse.

“Signals get faster all the time,” says Chris Ciufo, chief technology officer at General Micro Systems (GMS) in Rancho Cucamonga, Calif. “It’s very difficult to design these systems, because we have to talk about signal integrity.” Anything running in the gigahertz range over copper interconnects transmits electronic noise, for which systems designers must compensate, Ciufo explains.

“Every time there is a discontinuity in the system — from a connector, cable, circuit card via — it is like an antenna stub,” Ciufo says. “It affects and can degrade the signal. You have to worry about crosstalk and jitter; add up all those discontinuities, and it is very difficult to design these systems that run at really fast speeds.”
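One way to see why each jump in speed makes jitter harder to manage is to look at the unit interval, the time allotted to a single bit on a serial lane. The sketch below is illustrative only: it assumes per-lane rates of 10.3125 and 25.78125 gigabits per second (the lane rates used by common 10- and 100-Gigabit Ethernet backplane variants) and a hypothetical jitter allowance of 30 percent of the unit interval. Neither figure comes from the sources quoted here.

```python
def unit_interval_ps(lane_rate_gbps: float) -> float:
    """Duration of one bit on a serial lane, in picoseconds.

    Assumes simple two-level (NRZ) signaling, one bit per symbol.
    """
    if lane_rate_gbps <= 0:
        raise ValueError("lane_rate_gbps must be positive")
    return 1e3 / lane_rate_gbps  # 1 / (Gb/s) gives ns; x1000 gives ps


def jitter_budget_ps(lane_rate_gbps: float, fraction_of_ui: float = 0.3) -> float:
    """Total jitter allowance as a fraction of the unit interval.

    The 0.3 default is a hypothetical figure for illustration, not a value
    taken from any standard.
    """
    return unit_interval_ps(lane_rate_gbps) * fraction_of_ui


if __name__ == "__main__":
    for rate in (10.3125, 25.78125):
        ui = unit_interval_ps(rate)
        budget = jitter_budget_ps(rate)
        print(f"{rate} Gb/s lane: UI = {ui:.1f} ps, jitter budget = {budget:.1f} ps")
```

The faster lane leaves well under half the time per bit, so the same absolute amount of jitter from connectors, vias, and cables consumes a much larger share of the margin.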

Interconnect companies like TE Connectivity in Harrisburg, Pa., are keeping pace with technology by providing leading-edge connectors to minimize noise, crosstalk, and jitter in high-performance embedded computing. “The TE connector can run at 40 GHz, and they are talking about running it up to 100 GHz,” Ciufo says.

The problem, however, isn’t just the responsibility of the interconnect suppliers; it’s up to every systems designer to minimize signal integrity issues throughout their systems. “It’s really incumbent on the designers of all the bits and pieces of these systems to safeguard signal integrity issues,” Ciufo says. “With each jump in speed it gets more difficult. They also have to worry about the power supply signals; it isn’t easy. With each new generation of technology, the dial is turned up just a little bit more, so we have to keep upping our game.”

Thermal management and ruggedization

It’s a rule of thumb in embedded computing that higher performance and faster speeds mean increased waste heat. Fortunately, systems designers today have a wide and growing set of open-systems standards to choose from to help keep their systems cool and operating at peak efficiency.

“On the chassis we are seeing two effects: one, a lot of people want to put a lot of these circuit cards that are getting hotter and hotter inside an ATR [air transport rack standard chassis],” says Justin Moll, vice president of U.S. market development at rugged embedded chassis provider Pixus Technologies in Waterloo, Ontario. “In the past you might see six slots as the average size. Now people want to put in as many as 12 OpenVPX cards, and get 800 Watts into an ATR. People are trying to put more and more power on cards in less space, and that also means hotter cards.”

It can be difficult to do this while reducing system size, weight, and power consumption (SWaP). “It’s a challenge for us chassis guys to prevent all the enclosures from getting bigger and bigger because of the cooling they need,” Moll says.

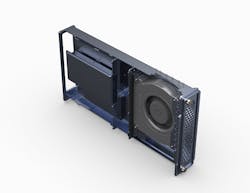

The Pixus ATR with heat exchange is designed for 3U OpenVPX boards, and can cool as much as 800 Watts. The inner chamber is sealed, with an outer shell to pull supplemental cooling air over the enclosure fins.

Pixus is designing rugged OpenVPX embedded computing chassis with heat exchangers on the outside to cool by pulling outside air across the outside edges. “The outer shell pulls air from the outside for extra cooling, while inside it is sealed and protected from the environment,” Moll says.

This kind of cooling approach that involves blown air can be a necessity, and not a luxury, in today’s powerful embedded computing designs. “People are more willing to use some of the air-cooled systems, and in rugged rack-mount applications there are a lot more of those,” Moll says. “The overall trend is keeping the air-cooled approach because they are getting beyond the limits of what traditional conduction cooling can do.”

Curtiss-Wright’s Straznicky says systems designers are making broader use of industry standards like VITA 48.8 air-flow-through and VITA 48.4 liquid-flow-through to help tackle heat in high-performance systems.

VITA 48.8 permits air inlets at both card edges, as well as on the top circuit card edge opposite the VPX connectors. It also can promote use of polymer or composite materials to reduce chassis size and weight.

VITA 48.8 seeks to improve the efficiency of the thermal path to cool high-performance processors, FPGAs, general-purpose graphics processing units (GPGPUs), and other hot components. Specifications for ANSI/VITA 48.8 use gasketing to prevent particulate contamination from the moving air.

When designers need even more cooling capacity, they can turn to VITA 48.3 liquid-flow-by and VITA 48.4 liquid-flow-through technology to keep cards sealed from outside contaminants, while capitalizing on the strong cooling properties of liquid to cool embedded electronics that generate as much as 800 or 900 Watts per slot.
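A rough energy balance shows why liquid is attractive at these power levels. The sketch below uses the textbook relation P = ρ · V̇ · c_p · ΔT to estimate the coolant flow needed to carry away 900 Watts. The fluid properties are approximate room-temperature values for air and plain water, the 10-degree coolant temperature rise is an arbitrary assumption, and fielded systems often use other coolants, so treat the numbers as order-of-magnitude only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    density_kg_m3: float         # approximate, near room temperature
    specific_heat_j_kg_k: float  # approximate, near room temperature


# Assumed property values for illustration; not taken from the article.
AIR = Coolant("air", 1.2, 1005.0)
WATER = Coolant("water", 1000.0, 4180.0)


def required_flow_l_per_s(heat_w: float, coolant: Coolant, delta_t_k: float) -> float:
    """Volumetric flow (liters per second) needed to absorb heat_w with a
    coolant temperature rise of delta_t_k, from P = rho * V_dot * c_p * dT."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    m3_per_s = heat_w / (
        coolant.density_kg_m3 * coolant.specific_heat_j_kg_k * delta_t_k
    )
    return m3_per_s * 1000.0  # cubic meters to liters


if __name__ == "__main__":
    for coolant in (AIR, WATER):
        flow = required_flow_l_per_s(900.0, coolant, delta_t_k=10.0)
        print(f"{coolant.name}: {flow:.3f} L/s to remove 900 W")
```

With these assumed properties, air needs several thousand times the volumetric flow of water to move the same heat, which is why a single hot slot can push a design from blown air toward liquid.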

Curtiss-Wright also is offering hybrid thermal management solutions that blend air-flow-through and liquid-flow-through technologies to cool embedded computing systems with widely ranging waste heat. “We have a hybrid approach that allows the designer to use conduction-cooled modules to optimize,” Straznicky says.

Copper vs. optical fiber interconnects

Most embedded computing industry experts are waiting for as long as they can before making the transition from copper to optical fiber interconnects — although few deny that an eventual move to optical fiber is a virtual certainty.

Many industry old-timers are amazed that copper interconnects today are capable of speeds as fast as 100 gigabits per second, whereas only a few years ago few thought that copper could carry signals much faster than 1 gigabit per second.

Fortunately, however, industry standards are evolving such that designers probably won’t be forced into making a choice anytime soon. Standard architectures are allowing hybrid approaches that can mix and match copper RF signals and optical signals over fiber on the same OpenVPX backplane.

Optical fiber interconnects offer obvious advantages over copper — faster speeds, lower system noise, better signal integrity, and enhanced security. Still, the transition can be difficult because of the expense, new technologies necessary, and the difficulty of keeping fiber interconnects clean enough for maximum signal throughput. “Fiber weighs less than copper, you can’t eavesdrop on it, and it can go long distances,” points out Mercury’s Bratton. “It also requires precision alignment, and cleanliness is an issue.”

FiberQA in Old Lyme, Conn., offers AVIT-DT technology that uses a system of robotics and automated software to inspect and clean dozens of optical fiber ferrules in a matter of minutes, rather than hours or days. While the AVIT-DT is big enough to take polishing plates, several military-standard circular connectors, or dozens of MPO ferrules, it is compact enough to fit on a workstation. It can help inspect individual ferrules or different sized military and aerospace shell connectors at the same time.

The Pentek plenum has an exposed wall that helps control the cooling airflow in Pentek high-performance data recorders.

Optical-to-electronic and electronic-to-optical conversion also is an issue for today’s embedded computing designs. “Optical-to-electronic and electronic-to-optical converters are crucial enabling technologies,” says Curtiss-Wright’s Straznicky. “In rugged transceivers, I don’t know if there are any on the market now, but once there is a clear need, then companies will go ahead and innovate.”

Companies to watch for future optical-to-electronic and electronic-to-optical conversion chips include Finisar Corp. in Sunnyvale, Calif.; Reflex Photonics in Kirkland, Quebec; and Ultra Communications Inc. in Vista, Calif.

Optical fiber offers more advantages than just signal throughput; it also helps data storage systems keep up with today’s fast microprocessors. Non-Volatile Memory Express (NVMe) solid-state drives can communicate with the CPUs at PCI Express Gen3 speeds, which alleviates the need for drive controllers that slow data throughput and add unnecessary controller latency.

“NVMe over fiber is a trend,” says GMS’s Ciufo. “To scale-up to more storage, we are looking for ways to connect more boxes with fiber with new protocols. Fiber is very light weight, immune to EMI [electromagnetic interference], is inherently not tappable, and gives you tremendous speeds with low SWaP.”

Despite its advantages, many companies still are reluctant to move to optical fiber. “No one will do optical until they have to,” says Abaco’s Pepper. “But when you get beyond 100-gigabit interconnects, we’ll have to get serious about these optical standards coming on.” Echoes Michael Munroe, backplane product specialist at Elma Electronic, “People will go to fiber when they have to, but there will be an awful lot of copper for RF and power. There always will be a mix.”

Emerging industry standards

One of today’s most talked-about emerging industry standards influencing embedded computing backplanes and chassis is the Sensor Open Systems Architecture (SOSA) standard, administered by The Open Group in San Francisco.

SOSA revolves around OpenVPX, and focuses on single-board computers and how to integrate them into sensor platforms. Embedded computing experts also say SOSA functionally is boiling down cumbersome OpenVPX standards into a useful subset for aerospace and defense applications. SOSA’s potential to streamline OpenVPX could bolster interoperability of third-party modules in military and aerospace systems, and help save costs and enhance competition.

The Apex rugged computer server for military and aerospace applications from General Micro Systems is designed to evolve and upgrade as system needs change over several years.

SOSA falls under an umbrella of emerging standards called Modular Open Systems Approach (MOSA). In addition to SOSA, MOSA includes Future Airborne Capability Environment (FACE); Vehicular Integration for C4ISR/EW Interoperability (VICTORY); and Open Mission Systems/Universal Command and Control Interface (OMS/UCI).

Military involvement in MOSA and SOSA standards is helping keep OpenVPX attractive to the military. Although SOSA is not yet a final standard, it eventually may help forge consensus among suppliers and users.

“SOSA is a big deal,” says Mercury’s Bratton. “It’s really got traction, and we are seeing a lot of technology re-use, upgradeability, removing vendor-lock, and scalability.”

Kontron America Inc. in San Diego has introduced the VX305C-40G 3U OpenVPX single-board computer that uses an open-systems architecture that aligns with SOSA. The embedded computer for battlefield server-class computing and digital signal processing (DSP) uses a defined OpenVPX single-board computer profile that marries a 40 Gigabit Ethernet port and user I/O to the 12-core version of the Intel Xeon D processor.

Close behind Kontron in introducing SOSA products is Pentek Inc. in Upper Saddle River, N.J., with the model 71813 SOSA-aligned LVDS XMC module with optical I/O. It is based on the Xilinx Kintex Ultrascale field-programmable gate array (FPGA) and features 28 pairs of LVDS digital I/O to align with the emerging SOSA standard. The model 71813 also implements an optional front panel optical interface supporting four 12-gigabit-per-second lanes to the FPGA.

Distributed architectures

A growing number of military embedded computing applications are dispensing entirely with the traditional circuit-card-and-backplane architecture in favor of distributing networked stand-alone computer boxes throughout their systems.

“There is a migration in the customer base toward small-form-factor computer systems,” says Doug Patterson, vice president of global marketing at Aitech Defense Systems Inc. in Chatsworth, Calif. “They want distributed intelligence and a way to communicate that back to the main mission computer, which is backplane-and-chassis-based.”

Distributed systems move signal processing and data conversion as close to sensors and antennas as possible, and perform as much processing and data reduction there as possible. The reduced data then flows back to a centralized computer system. “It’s taking a lot of intelligence and pushing that out to the sensor,” Patterson says. “Instead of flowing the data through a harness, it goes out to the edge to the sensors.”

Driving the move to distributed architectures are SWaP and price, Patterson says. “It’s moving smaller compute clusters out closer to the sensors, and passing that data along to the main mission processors.”

Will distributed architectures replace traditional bus-and-board boxes? Probably not in the foreseeable future, Patterson says. “All that data has to go somewhere,” he says. “Payloads typically form a ring of boxes around the vehicle, which are booster controllers, GPS, and navigation systems. All that data has to reside in a box of some form. It may change in the future, but for now you will have a main mission computer.”

Company List

Abaco Systems Inc.

Huntsville, Ala.

www.abaco.com

Aitech Defense Systems Inc.

Chatsworth, Calif.

www.rugged.com

Atrenne Integrated Solutions Inc.

Littleton, Mass.

www.atrenne.com

Crystal Group Inc.

Hiawatha, Iowa

www.crystalrugged.com

Curtiss-Wright Defense Solutions

Ashburn, Va.

www.curtisswrightds.com

Elma Electronic Inc.

Fremont, Calif.

www.elma.com

Extreme Engineering Solutions (X-ES)

Verona, Wis.

www.xes-inc.com

FiberQA

Old Lyme, Conn.

https://www.fiberqa.com

Finisar Corp.

Sunnyvale, Calif.

www.finisar.com

General Micro Systems (GMS) Inc.

Rancho Cucamonga, Calif.

www.gms4sbc.com

Kontron America Inc.

San Diego

www.kontron.com

Mercury Systems Inc.

Andover, Mass.

www.mrcy.com

Pentek Inc.

Upper Saddle River, N.J.

www.pentek.com

Pixus Technologies

Waterloo, Ontario

www.pixustechnologies.com

Reflex Photonics

Kirkland, Quebec

https://reflexphotonics.com

Systel Inc.

Sugar Land, Texas

www.systelusa.com

TE Connectivity

Harrisburg, Pa.

www.te.com/usa-en/home.html

Ultra Communications Inc.

Vista, Calif.

www.ultracomm-inc.com

VadaTech Inc.

Henderson, Nev.

www.vadatech.com

ZMicro

San Diego

https://zmicro.com